Field Robotics and the SpaceBot Cup

When we say that our main research goal is to increase the autonomy of mobile systems, we have to be more specific about what we mean by “autonomy”. Clearly, every autonomous system has to perceive its environment, extract and interpret relevant information, and decide on its next action. Still, “fully” autonomous systems differ enormously in the boundary conditions under which they can operate. Take self-driving cars as an example. Currently, a fully autonomous self-driving car requires highly precise digital maps, a global position from GPS/GNSS, and highly precise sensors such as 3D laserscanners to localize itself in the map. In contrast, a human driver (also fully autonomous) needs neither a map nor a GPS signal, let alone a laserscanner, just to drive and follow the road in any weather! Thus, a self-driving car is “guided” by an enormous effort to provide external infrastructure, while a human driver relies on vastly superior perception capabilities and an internal model of how driving “works”. For us, increasing autonomy means moving from the “guided” autonomy of a self-driving car to the “full” autonomy of a human driver.

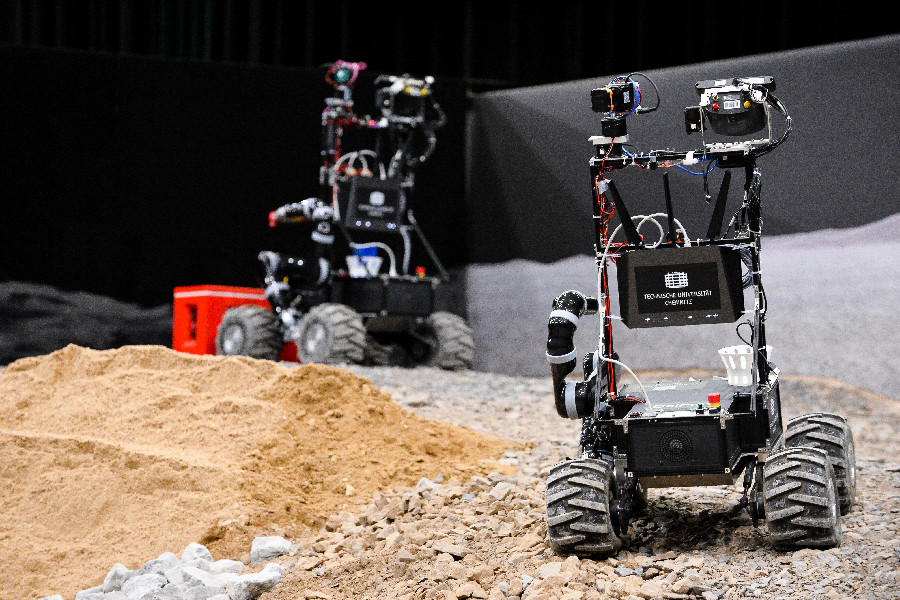

Translating this goal to mobile robotics means that our robots have to operate in an unstructured 3D environment without a given map and without a global position from GPS/GNSS or from external sensors such as a motion capture system. These constraints have driven many of our research efforts, e.g. in robust SLAM, place recognition in changing environments, and indoor MAVs. Thus, the announcement of the first SpaceBot Cup in 2013 fit perfectly into our research agenda. In 2015, a second SpaceBot Cup was held with the same basic tasks described below. We participated in both events with a system of two identical robots (for cooperation as well as for fault tolerance).

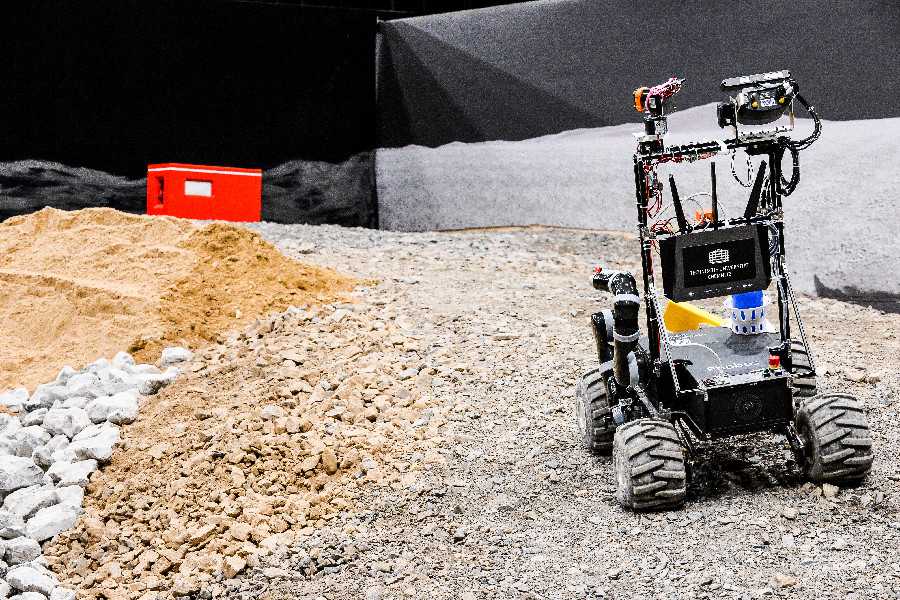

The goal of the SpaceBot Cup was to assess the current state of the art of autonomous robotics for planetary exploration. After an application period, ten German universities and research institutes were selected as participating teams. On a simulated planetary surface built inside a television studio, the task was to explore the environment, find and transport two objects, assemble them on a base object, and then drive back to the starting point. Each team had a single run of 60 minutes to perform these tasks. The complete area was divided by a black barrier into two identical fields to allow parallel runs.

The robots started at a landing site, from which they had to begin their exploration (obviously without GNSS) while navigating around dangerous soil and slopes. A “battery pack” (a yellow rectangular cuboid weighing 800 g) and a blue cup had to be discovered at unknown locations. The battery pack had to be picked up, and the cup could be used to take a soil sample. Both objects had to be transported to a red base object on a hill.

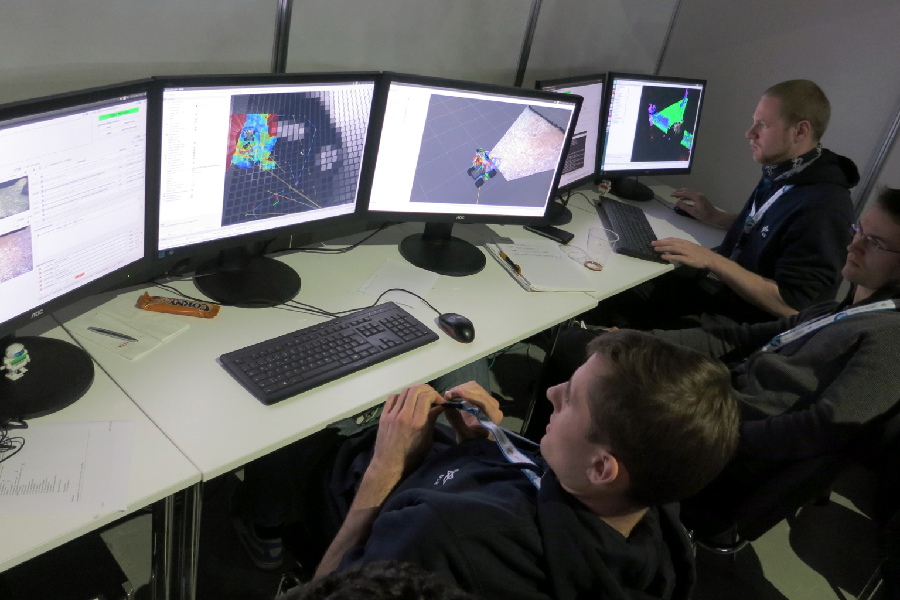

All operations had to be performed fully autonomously. In a simulated mission control center “on earth”, the operators could monitor the sensor data with a time delay. Within the 60 minutes of operation, there were three time slots of 5 minutes each in which the operators could send high-level commands back to the robots on the “planet surface”.

After the robots reached the red base object, they had to complete an assembly task with the battery pack and the cup: the cup with the soil sample had to be placed on the red object, and the battery pack had to be inserted into a slot.

The cup with the soil sample was weighed by a scale inside the base object. After the assembly was completed, the robots had to return to the landing site within the 60-minute time frame.

With these rules fully enforced, the SpaceBot Cup is one of the most difficult robot competitions worldwide. Because many teams had problems with the tasks, some rules were relaxed, and since the event was a showcase, the teams were not ranked. Of course, we also had difficulties completing all tasks fully autonomously, but overall we were perceived as one of the three best teams in the competition.

After the official runs, we had the opportunity to scan the whole arena without the dividing wall. The resulting map was built from fifteen 3D laserscans registered with ICP (Iterative Closest Point).
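For readers unfamiliar with ICP, here is a minimal sketch of the basic point-to-point variant. It is not the pipeline we used for the arena map: it assumes a simplified 2D setting, noise-free points and brute-force nearest-neighbour search, whereas real 3D scan registration typically adds a k-d tree, outlier rejection and a good initial guess. All function names (`icp`, `best_rigid_transform`, `nearest`, ...) are hypothetical and chosen for illustration only. Each iteration alternates two steps: match every point of the moving scan to its closest point in the reference scan, then compute the closed-form least-squares rotation and translation for those matches.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
Transform = Tuple[float, float, float]  # (theta, tx, ty): rotate about origin, then translate


def apply(t: Transform, p: Point) -> Point:
    """Rotate p by theta about the origin, then translate by (tx, ty)."""
    theta, tx, ty = t
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def nearest(p: Point, cloud: List[Point]) -> Point:
    """Brute-force nearest neighbour (a real system would use a k-d tree)."""
    return min(cloud, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)


def best_rigid_transform(src: List[Point], dst: List[Point]) -> Transform:
    """Closed-form least-squares rigid transform mapping src[i] onto dst[i] (2D only)."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay, bx, by = px - sx, py - sy, qx - dx, qy - dy  # centred coordinates
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)  # angle maximising the summed alignment
    c, s = math.cos(theta), math.sin(theta)
    # The translation moves the rotated source centroid onto the target centroid.
    return (theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy))


def mean_sq_error(cloud: List[Point], target: List[Point]) -> float:
    """Mean squared distance from each point to its nearest target point."""
    total = 0.0
    for p in cloud:
        q = nearest(p, target)
        total += (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2
    return total / len(cloud)


def icp(src: List[Point], dst: List[Point], max_iter: int = 50,
        tol: float = 1e-12) -> Tuple[Transform, float]:
    """Align src to dst; returns the accumulated transform and the final error."""
    total: Transform = (0.0, 0.0, 0.0)
    cur = list(src)
    err = mean_sq_error(cur, dst)
    for _ in range(max_iter):
        matches = [nearest(p, dst) for p in cur]   # 1. data association
        step = best_rigid_transform(cur, matches)  # 2. alignment for these matches
        cur = [apply(step, p) for p in cur]        # 3. move the scan
        # Compose step after total: R = R_step * R_total, t = R_step * t_total + t_step
        tx, ty = apply(step, (total[1], total[2]))
        total = (total[0] + step[0], tx, ty)
        new_err = mean_sq_error(cur, dst)
        converged = err - new_err < tol
        err = new_err
        if converged:
            break
    return total, err
```

As a usage example, one could take an L-shaped reference scan, create a copy displaced by a small rotation and translation with `apply`, and pass both to `icp`; for displacements this small, every initial match is already correct, so a single alignment step recovers the transform. With larger offsets, plain ICP can converge to a local minimum, which is why a reasonable initial guess matters in practice.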

Here you can watch the official DLR video of the event with English subtitles:

More information and all the technical details can be found in these two papers describing our systems and lessons learned during the SpaceBot Cup 2013 and 2015:

- (2016) Two Autonomous Robots for the DLR SpaceBot Cup — Lessons Learned from 60 Minutes on the Moon. In Proc. of Intl. Symposium on Robotics (ISR)

- (2014) Phobos and Deimos on Mars — Two Autonomous Robots for the DLR SpaceBot Cup. In Proc. of Intl. Symposium on Artificial Intelligence, Robotics and Automation in Space (iSAIRAS)