Towards Autonomous Flight of a MAV in GNSS-denied Environments

At the Chair of Automation Technology, we have been working on autonomous systems for more than 15 years (UGVs, airships/blimps, automated driving). In 2007 we started working with quadrotors, using three AscTec Hummingbirds shortly after they became available. From the beginning, our research has focused on fully autonomous systems in indoor/GPS-denied environments without external sensors or computation. Since this required additional on-board sensors and computing power, we acquired an AscTec Pelican quadrotor in 2010 because of its higher payload capacity. The results of several projects using these MAVs with additional sensors and modifications have been published since 2008, e.g. a camera-based autonomous landing procedure, a self-built optical flow sensor and, in 2011, one of the first autonomous indoor flights using a Kinect on the Pelican.

Motivation

Our research focuses on making autonomous mobile systems usable in a variety of civil applications, mainly in the areas of emergency response, disaster control, and environmental monitoring. These scenarios require a high level of autonomy, reliability and general robustness from every robotic system, regardless of whether it operates on the ground or in the air. Especially in partly collapsed buildings, flying systems may have an advantage over mobile ground robots, provided they can be operated robustly and autonomously.

Our solution

Several problems have to be solved on the way to fully autonomous operation of a MAV. We started our research by finding a simple solution for autonomous landing, which is a fundamental ability.

The basic flight control of a MAV relies on an IMU. Because inertial measurements alone drift over time, stable hovering over one place is only possible with additional sensor information, such as GNSS measurements in outdoor scenarios. Indoors, a natural step is to use optical flow as additional sensor information. In our case, we could have used the downward-looking camera, but this would have required processing the images on the embedded computer system and introduced additional delays into the cascaded position controller. Therefore, we decided to develop a custom microcontroller board using an ADNS-3080 optical flow sensor, as described below.

With the introduction of the Kinect camera, a cost-effective and easy-to-use 3D sensor became available to the robotics community. A natural step was to mount this sensor on our MAV and move further towards fully autonomous flight by using the sensor's depth and image information in a representative scenario: an autonomous corridor flight.

As the system increased in complexity, a better validation and evaluation approach was needed, so we built a simple tracking system for controller design and validation of sensor fusion algorithms.

Especially in GNSS-denied environments without global position information, a good estimate of the MAV's state is essential. As the usual filter-based approach has drawbacks regarding linearization errors, the handling of delayed measurements, and possible inconsistencies, we built our solution on a factor-graph-based optimization algorithm, described on a separate page.
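To illustrate why a smoothing formulation handles delayed measurements naturally, here is a minimal sketch. It is not our implementation (which is described on the separate page), and all poses, measurements and standard deviations are hypothetical. Four 1D poses are linked by odometry factors and anchored by a prior; an absolute measurement of pose 2 arrives only after pose 3 exists. Because every factor is linear here, the maximum a posteriori estimate is the solution of a weighted linear least-squares problem, solved below via the normal equations. Real systems use nonlinear, incremental solvers (for example iSAM2 in the GTSAM library).

```python
# Minimal linear factor-graph sketch with hypothetical 1D poses x0..x3.
# Each factor is (coefficients, measured value z, standard deviation sigma)
# and encodes the residual  sum_i(c_i * x_i) - z, weighted by 1/sigma.

def solve_normal_equations(A, b):
    """Solve A x = b for a small dense system (Gaussian elimination, partial pivoting)."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def optimize(num_poses, factors):
    """Build and solve the weighted normal equations J^T W J x = J^T W z."""
    H = [[0.0] * num_poses for _ in range(num_poses)]
    g = [0.0] * num_poses
    for coeffs, z, sigma in factors:
        w = 1.0 / sigma**2
        for i, ci in coeffs.items():
            g[i] += w * ci * z
            for j, cj in coeffs.items():
                H[i][j] += w * ci * cj
    return solve_normal_equations(H, g)


factors = [
    ({0: 1.0}, 0.0, 0.01),           # prior: x0 = 0
    ({0: -1.0, 1: 1.0}, 1.0, 0.1),   # odometry: x1 - x0 = 1.0
    ({1: -1.0, 2: 1.0}, 1.0, 0.1),   # odometry: x2 - x1 = 1.0
    ({2: -1.0, 3: 1.0}, 1.0, 0.1),   # odometry: x3 - x2 = 1.0
]
print(optimize(4, factors))

# A delayed absolute measurement of x2 arrives after x3 was added: it is simply
# appended as another factor, and the whole trajectory is re-estimated.
factors.append(({2: 1.0}, 1.8, 0.05))
print(optimize(4, factors))
```

The delayed measurement pulls x2 towards 1.8 and, through the odometry factors, also corrects x1 and x3; a filter that has already marginalized x2 cannot apply this correction as directly.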

We also implemented our sensor fusion approach, in combination with other components, in a simulation environment while participating in the European Robotics Challenges (EuRoC), where we successfully took part in the first stage. The challenge was to solve state estimation, localization, mapping and planning for a simulated system with restricted on-board processing.

Autonomous Landing

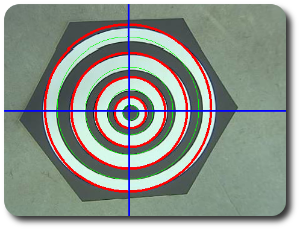

In areas with poor GNSS signals or in GNSS-denied environments, accurate autonomous landing is hard to achieve, so we developed a simple camera-based system for precision landing. Based on a predefined landing pad, an image processing algorithm on board the MAV provides relative position information for a subsequent control algorithm.
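Because the landing pad is predefined, its real size is known, and the pinhole camera model turns its apparent size and image position into a relative position. The sketch below assumes a square pad of known side length seen by a camera looking straight down, a focal length expressed in pixels, and a known principal point; the names `PadObservation` and `relative_position` and all numbers are hypothetical, not taken from our software.

```python
from dataclasses import dataclass


@dataclass
class PadObservation:
    cx: float       # pad centre in the image, column (pixels)
    cy: float       # pad centre in the image, row (pixels)
    side_px: float  # apparent side length of the pad (pixels)


def relative_position(obs, pad_side_m, focal_px, principal_point):
    """Pinhole model: depth z = f * S / s, then back-project the centre offset.

    Returns the pad position (x, y, z) in camera axes, in metres. The UAV's
    offset relative to the pad is the negation of (x, y) in the same axes.
    """
    z = focal_px * pad_side_m / obs.side_px
    u0, v0 = principal_point
    x = (obs.cx - u0) * z / focal_px
    y = (obs.cy - v0) * z / focal_px
    return x, y, z


# Hypothetical example: 0.5 m pad appearing 100 px wide, f = 400 px.
print(relative_position(PadObservation(200.0, 120.0, 100.0), 0.5, 400.0, (160.0, 120.0)))
```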

The OpenCV-based software on the embedded computer system receives and processes the images from the onboard USB Logitech QuickCam 4000. It estimates the height above ground and the position of the UAV in x- and y-direction relative to the landing pad, projected onto the ground plane. Because the camera is rigidly mounted on the UAV frame and is not tilt-compensated in any way, these estimates (especially the translation in x and y) must be corrected using the current pitch (nick) and roll angles of the UAV. The corrected position estimates are then fed into a PID controller that generates the motion commands needed to keep the UAV steady above the center of the landing pad. This controller runs on the ATmega644P microcontroller.
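The tilt correction and the subsequent PID step can be sketched as follows. This is a hedged illustration in Python, not the ATmega644P firmware: it assumes that the camera axes coincide with the body axes (x forward, y right, z down along the optical axis), a ZYX Euler convention with yaw ignored, and gains chosen by the user. The functions `level_position` and the class `PID` are hypothetical names.

```python
import math


def level_position(p_cam, roll, pitch):
    """Rotate a camera-frame vector into a gravity-aligned frame (yaw ignored).

    Assumes camera axes = body axes (x forward, y right, z down) and
    p_level = R_y(pitch) @ R_x(roll) @ p_cam. Angles in radians.
    """
    x, y, z = p_cam
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    # Rotation about the x axis (roll).
    y1 = cr * y - sr * z
    z1 = sr * y + cr * z
    # Rotation about the y axis (pitch).
    x2 = cp * x + sp * z1
    z2 = -sp * x + cp * z1
    return x2, y1, z2  # horizontal offsets and height of the pad below the UAV


class PID:
    """Textbook PID controller with a simple rectangular integrator."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Hypothetical use: the pad is seen on the optical axis while pitched by 10 degrees,
# so it actually lies ahead of the UAV; the x offset is the control error.
dx, dy, h = level_position((0.0, 0.0, 2.0), 0.0, math.radians(10.0))
pid_x = PID(kp=0.8, ki=0.05, kd=0.3)
print(dx, h, pid_x.update(dx, 0.05))
```

Without the correction, the pad in this example would appear centred although it lies about 0.35 m ahead of the point below the UAV.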

Optical Flow for position stabilization

In GPS-denied environments without additional sensors, position and velocity can only be obtained by integrating the measurements of the onboard accelerometers and gyroscopes. However, because these signals are noisy, large errors accumulate quickly, rendering this procedure useless for velocity or even position control. In our approach, an optical flow sensor facing the ground provides information on the current velocity and position of the UAV. The Avago ADNS-3080 we use is commonly found in optical mice and computes its own motion from optical flow with high accuracy at frame rates of up to 6400 fps. After replacing its optics with an M12-mount lens (f = 4.2 mm), we obtain high-quality position and velocity signals that accumulate only small errors during flight.
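A downward-facing flow sensor measures angular motion of the ground texture, so converting its counts into metric velocity requires the height above ground and a compensation for the apparent flow caused by the vehicle's own rotation. The sketch below shows this conversion under stated assumptions: `rad_per_count` is a hypothetical calibration constant (roughly pixel pitch divided by the 4.2 mm focal length), the small-angle approximation holds, and the caller supplies the rotation-induced flow already mapped onto the sensor axes from the gyro rates (that axis and sign mapping depends on how the sensor is mounted). `FlowOdometry` is a hypothetical name.

```python
from dataclasses import dataclass


@dataclass
class FlowOdometry:
    rad_per_count: float  # hypothetical calibration: flow angle per sensor count
    x: float = 0.0        # integrated position along sensor x [m]
    y: float = 0.0        # integrated position along sensor y [m]

    def update(self, dx_counts, dy_counts, rot_x, rot_y, height, dt):
        """Convert one flow reading into velocity [m/s] and integrate position.

        rot_x, rot_y: flow angle [rad] caused by vehicle rotation during dt,
        derived from the gyros by the caller (mounting-dependent mapping).
        """
        # Translational part of the measured angular flow.
        trans_x = dx_counts * self.rad_per_count - rot_x
        trans_y = dy_counts * self.rad_per_count - rot_y
        # Small-angle approximation: ground displacement = height * angle.
        vx = height * trans_x / dt
        vy = height * trans_y / dt
        self.x += vx * dt
        self.y += vy * dt
        return vx, vy


odo = FlowOdometry(rad_per_count=0.001)
print(odo.update(10, -5, 0.0, 0.0, height=2.0, dt=0.01), odo.x, odo.y)
```

Since the result scales linearly with height, an error in the altitude estimate translates directly into a proportional velocity error.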

A similar device for giving a MAV hovering capabilities by means of optical flow was developed by the Pixhawk open-hardware project with their PX4FLOW camera. The company Parrot also uses optical flow in its AR.Drone, where the flow is computed by an embedded computer system to provide assisted flight capabilities.

Autonomous flight using an RGB-D camera

Autonomous corridor following is performed using the Kinect sensor, the Point Cloud Library (PCL) and ROS in combination with our own velocity, position and altitude controllers. The 3D point cloud from the Kinect sensor is pre-processed and searched for planes. The position and orientation of the UAV inside the corridor can be estimated from the plane parameters. A high-level controller then generates motion commands for the lower-level controllers, which keep the UAV on a trajectory along the middle of the corridor.
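How plane parameters translate into a pose inside the corridor can be sketched as follows. The sketch assumes that two vertical wall planes have already been segmented (PCL's plane model, for example, yields coefficients `[a, b, c, d]` with `a*x + b*y + c*z + d = 0` and a unit normal), that the points are expressed in a body frame with x forward, y left and z up (the ROS REP 103 convention), and that yaw stays well below 90 degrees. The function names are hypothetical; this is not our actual pipeline.

```python
import math


def normalize_wall(coeffs):
    """Orient a plane [a, b, c, d] (unit normal) so that its normal points to +y."""
    a, b, c, d = coeffs
    if b < 0:
        a, b, c, d = -a, -b, -c, -d
    return a, b, c, d


def corridor_pose(left_wall, right_wall):
    """Return (yaw, lateral_offset, width) of the UAV relative to the corridor.

    yaw > 0: UAV rotated counter-clockwise (to the left) w.r.t. the corridor axis.
    lateral_offset > 0: UAV is left of the centre line.
    """
    al, bl, _, dl = normalize_wall(left_wall)
    ar, br, _, dr = normalize_wall(right_wall)
    # Both wall normals encode the same yaw; average the two estimates.
    yaw = 0.5 * (math.atan2(al, bl) + math.atan2(ar, br))
    # With the normal oriented to +y, the signed wall distance along it is -d.
    dist_left, dist_right = -dl, -dr
    centre = 0.5 * (dist_left + dist_right)
    return yaw, -centre, dist_left - dist_right


# Hypothetical walls: 2 m wide corridor, UAV 0.2 m left of centre, yawed 0.1 rad.
s, c = math.sin(0.1), math.cos(0.1)
print(corridor_pose((s, c, 0.0, -0.8), (-s, -c, 0.0, -1.2)))
```

A high-level controller can then drive both `yaw` and `lateral_offset` towards zero while commanding a forward velocity.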

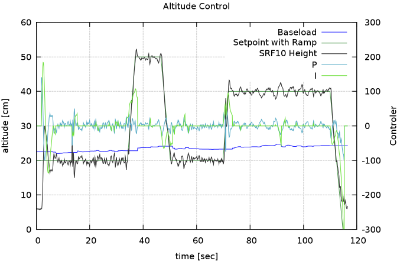

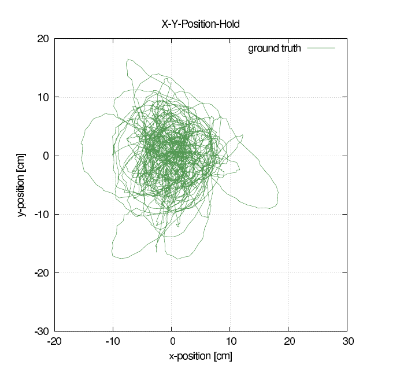

System identification, filter and controller design

One of our research projects focuses on enabling micro aerial vehicles to operate autonomously in GPS-denied environments, especially in indoor scenarios. Autonomous flight in confined spaces is a challenging task for UAVs and calls for accurate motion control as well as accurate environmental perception and modelling. Accurate motion control requires knowledge of the system model and the system parameters of the specific UAV at hand. Therefore, a global position and orientation measurement system is needed to perform system identification and, based on it, to design suitable control algorithms. Evaluating the resulting control performance likewise requires ground truth measurements.
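As a hedged illustration of the identification step, the sketch below fits a first-order discrete-time model `y[k+1] = a*y[k] + b*u[k]` by linear least squares to logged input/output data, such as a commanded tilt angle and the velocity measured by the tracking system. The model structure and the function name `fit_first_order` are assumptions for illustration, not the model we identified for our UAV.

```python
def fit_first_order(u, y):
    """Least-squares fit of y[k+1] = a*y[k] + b*u[k] from logged data.

    Solves the 2x2 normal equations
        [s_yy s_yu] [a]   [r_y]
        [s_yu s_uu] [b] = [r_u]
    with Cramer's rule.
    """
    s_yy = s_yu = s_uu = r_y = r_u = 0.0
    for k in range(len(y) - 1):
        s_yy += y[k] * y[k]
        s_yu += y[k] * u[k]
        s_uu += u[k] * u[k]
        r_y += y[k] * y[k + 1]
        r_u += u[k] * y[k + 1]
    det = s_yy * s_uu - s_yu * s_yu
    if abs(det) < 1e-12:
        raise ValueError("input is not exciting enough to identify both parameters")
    a = (r_y * s_uu - s_yu * r_u) / det
    b = (s_yy * r_u - s_yu * r_y) / det
    return a, b


# Synthetic, noise-free data from a hypothetical system with a = 0.9, b = 0.2.
u = [1.0, 1.0, 0.0, 1.0, 0.0, 0.0, 1.0, 1.0, 0.0, 1.0]
y = [0.0]
for k in range(len(u) - 1):
    y.append(0.9 * y[k] + 0.2 * u[k])
print(fit_first_order(u, y))
```

With real measurements the input should vary enough (persistent excitation) for both parameters to be identifiable; a constant input from a steady state makes the normal equations singular.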

We developed a cost-effective tracking system, similar in principle to commercial motion capture systems, for measuring the pose of a robot. The robot is equipped with active markers consisting of high-intensity LEDs and a diffuser. These active markers can be detected with a single camera using image processing algorithms. By solving a perspective-three-point (P3P) pose estimation problem, we can calculate the system's pose. Although the system is not as accurate or as fast as commercially available motion capture systems, it has proved useful for evaluating state estimation algorithms and for controller design.
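Because the markers are bright, diffused LEDs, detecting them can be as simple as thresholding the image and computing the centroid of each bright blob. The sketch below illustrates this detection step only, on a grayscale image given as a list of rows; the threshold value and the function name `find_led_markers` are hypothetical. The resulting 2D centroids, together with the known marker geometry, would form the input of a P3P solver (OpenCV, for example, offers `solvePnP` with the `SOLVEPNP_P3P` flag).

```python
def find_led_markers(image, threshold):
    """Return intensity-weighted centroids (row, col) of bright blobs.

    image: list of rows of grayscale values; threshold must be > 0.
    Pixels >= threshold that are 4-connected form one marker.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] < threshold:
                continue
            # Flood fill to collect all pixels of this blob.
            stack, pixels = [(r, c)], []
            seen[r][c] = True
            while stack:
                pr, pc = stack.pop()
                pixels.append((pr, pc))
                for nr, nc in ((pr + 1, pc), (pr - 1, pc), (pr, pc + 1), (pr, pc - 1)):
                    inside = 0 <= nr < rows and 0 <= nc < cols
                    if inside and not seen[nr][nc] and image[nr][nc] >= threshold:
                        seen[nr][nc] = True
                        stack.append((nr, nc))
            # Weighting by intensity yields sub-pixel marker positions.
            w = sum(image[pr][pc] for pr, pc in pixels)
            centroids.append((sum(pr * image[pr][pc] for pr, pc in pixels) / w,
                              sum(pc * image[pr][pc] for pr, pc in pixels) / w))
    return centroids


img = [[0] * 6 for _ in range(6)]
img[1][1] = img[1][2] = 200
img[4][4] = 250
print(find_led_markers(img, 128))
```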

Additional information can be found in the corresponding publication: Cost-Efficient Mono-Camera Tracking System for a Multirotor UAV Aimed for Hardware-in-the-Loop Experiments

Publications

- (2013) Faktorgraph-basierte Sensordatenfusion zur Anwendung auf einem Quadrocopter [Factor-Graph-Based Sensor Data Fusion Applied to a Quadrocopter]. Dissertation, TU Chemnitz

- (2013) Incremental Smoothing vs. Filtering for Sensor Fusion on an Indoor UAV. In Proc. of Intl. Conf. on Robotics and Automation (ICRA), pages 1773-1778. DOI: 10.1109/ICRA.2013.6630810

- (2012) Autonomous Corridor Flight of a UAV Using a Low-Cost and Light-Weight RGB-D Camera. In Advances in Autonomous Mini Robots, pages 183-192, Springer Berlin Heidelberg. ISBN: 978-3-642-27481-7. DOI: 10.1007/978-3-642-27482-4_19

- (2012) Cost-Efficient Mono-Camera Tracking System for a Multirotor UAV Aimed for Hardware-in-the-Loop Experiments. In Proc. of Intl. Multi-Conference on Systems, Signals and Devices (SSD). DOI: 10.1109/SSD.2012.6198047

- (2012) Motion Estimation for Autonomous Quadrocopter Indoor Flight. In Proc. of Intl. Multi-Conference on Systems, Signals and Devices (SSD). DOI: 10.1109/SSD.2012.6198060

- (2010) Validating an Active Stereo System Using USARSim. In Simulation, Modeling, and Programming for Autonomous Robots, Vol. 6472, pages 387-398, Springer Berlin / Heidelberg. DOI: 10.1007/978-3-642-17319-6_36

- (2010) Creating a Distributed Development Environment for Unmanned Aerial Vehicles using USARSim. In Proc. of the 55th International Scientific Colloquium, IWK 2010

- (2010) Active Stereo Vision for Autonomous Multirotor UAVs in Indoor Environments. In Proc. of the 11th Conference Towards Autonomous Robotic Systems (TAROS), pages 111-118. ISBN: 978-1-84102-263-5

- (2009) A Vision Based Onboard Approach for Landing and Position Control of an Autonomous Multirotor UAV in GPS-Denied Environments. In Proc. of Intl. Conf. on Advanced Robotics (ICAR)

- (2008) Autonomous Landing for a Multirotor UAV Using Vision. In Workshop Proc. of SIMPAR 2008 Intl. Conf. on Simulation, Modeling and Programming for Autonomous Robots, pages 482-491. ISBN: 978-88-95872-01-8