Camera-based Navigation in a 3D Map

In our work, we tried to answer the question how a camera can be localized within a given 3D map. This can be usefull for different reasons:

- Global Localization -- A given map enables a system to perform its localization within a global coordinate system. This is particularly suited in case of a robot swarm: each robot localizes itself within the global frame and concurrently determines its relative pose to all other robots.

- Cheaper / Simpler Robots -- An accurate localization in a point cloud can be performed well with a 3D laserscanner, however, this sensor type comes along with different disadvantages like high price, higher weight, partially slower frame rate, or more sophisticated mechanics. Accordingly, if a robot could only rely on a camera, it is simpler, cheaper and lighter; properties which are particularly beneficial for swarm robotics.

- Heterogenous Robot Teams -- Instead of a given 3D map, the map could be acquired during runtime by a single robot equipped with a 3D laserscanner. Concurrently, additonal robots solely equipped with a camera can localize themself within the acquired 3D map. This is advantageous as the 3D map defines the global coordinate system.

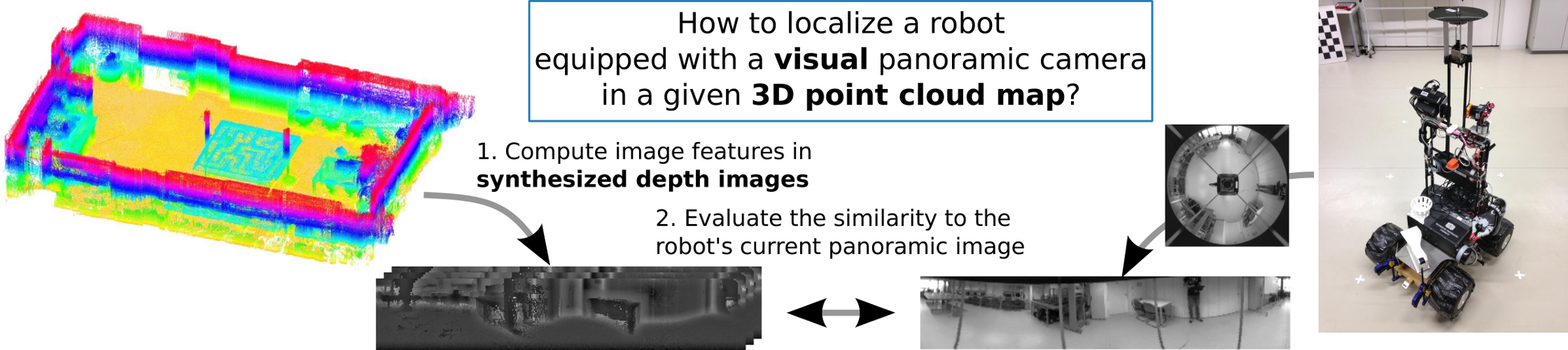

The basic idea is to imagine what a view looks like from an arbitrary pose (synthesize), and to compare a bunch of synthesized images with the actual current image. Subsequently, a similarity measure between camera image and synthetic images has to be performed to determine the actual pose of the camera. To increase its applicability, it is combined with a particle filter for a reduction of the required number of synthesized images.

We investigated to different approaches building upon two different types of map information (see below):

- Depth

- Semantics (to appear)

Depth

The proposed approach solely builds upon the depth information of a 3D map without the need for additonal sensor information like intensity or colour, which simplifies the mapping procedure. Accordingly, a 3D map could be acquired by a 3D laserscanner or even could be given as an extruded floor plan. The performed similarity measure between the synthetic and real images relies on the assumption that edges in a depth image are likely to appear in a camera image but not vice versa.

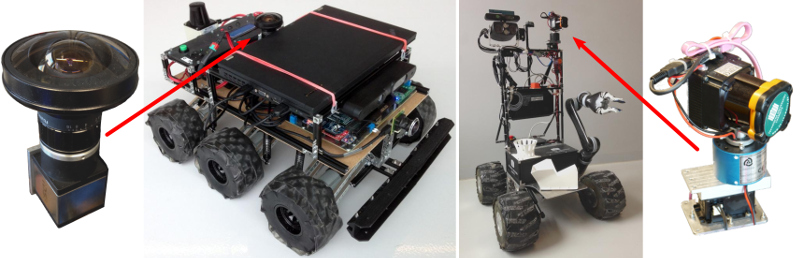

Panoramic images are acquired by omnidirectional cameras either with a camera facing onto a mirror or a fisheye camera, and environments are mapped with a custom-made 3D laserscanner. Both sensors are mounted on two different robots.

Datasets

For evaluation, we build upon different datasets:

- Synthetic City Center (generated from the "Multi-FoV" synthetic datasets)

- Lab

- Corridor

Publications

- (2017) Sampling-based Methods for Visual Navigation in 3D Maps by Synthesizing Depth Images. In Proc. of Intl. Conf. on Intelligent Robots and Systems (IROS). DOI: 10.1109/IROS.2017.8206067

- (2017) Synthesized semantic views for mobile robot localization. In Proc. of European Conf. on Mobile Robotics (ECMR). DOI: 10.1109/ECMR.2017.8098662

- (2017) Towards camera based navigation in 3D maps by synthesizing depth images. In Proc. of Towards Autonomous Robotic Systems (TAROS). DOI: 10.1007/978-3-319-64107-2_49. Best Paper Award Winner