Environment Perception with 3D Laserscanner


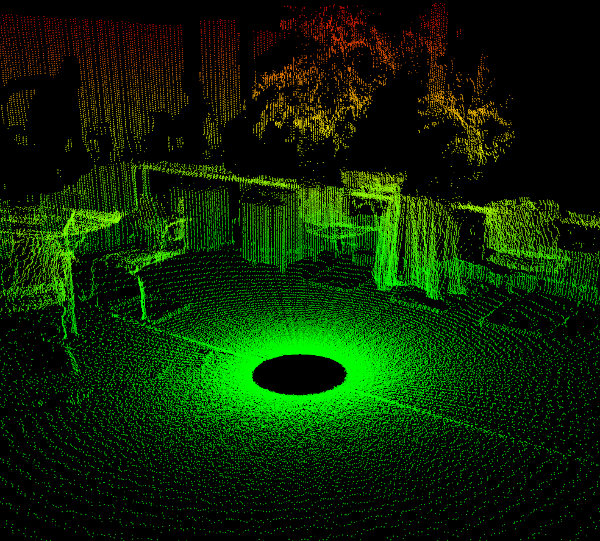

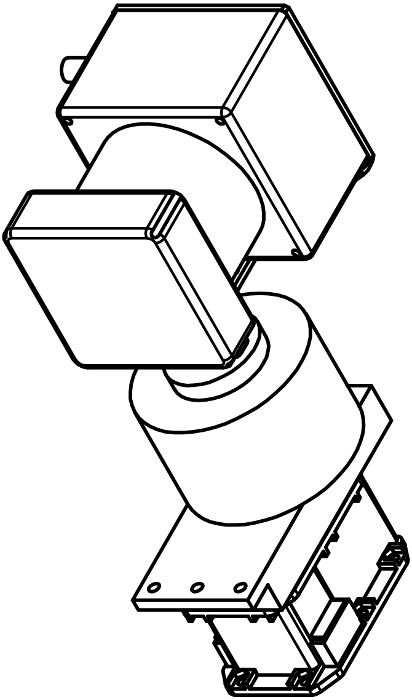

Laser scanners such as the Hokuyo UTM-30LX shown on the right measure the distance to an obstacle from the time of flight of a laser pulse. Such sensors are very popular in mobile robotics. The Hokuyo UTM-30LX measures distances of up to 30 m in a 2D plane, over a 270° field of view, with an angular resolution of 0.25°. This 2D measurement plane is often sufficient indoors, where the ground is flat. In rough terrain, however, three-dimensional environment perception is mandatory. To obtain a 3D scan, a 2D laser scanner can be mounted on a rotating element such as a servomotor, so that the scan plane is rotated by a known angle. A 3D point cloud can then be computed from the range measurements and the known rotation angle. The following image shows a 3D laser scan of a room with a couple of chairs, two desks, a jacket and plants.
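The conversion from rotated 2D scans to a 3D point cloud can be sketched in C++ as below. This is a minimal sketch under simplifying assumptions, not the implementation used for this scanner:

- The servo is assumed to rotate the scan plane about the scanner's own x-axis.
- The scanner's optical centre is assumed to lie exactly on the rotation axis. Real mounts usually need an extra offset and calibration.
- `Scan2D`, `scanTo3D`, `beamCount` and `rangeFromTimeOfFlight` are hypothetical names. The scan fields loosely follow the naming of ROS's `sensor_msgs/LaserScan` message.

The range of each beam follows from the pulse's round-trip time t as d = c·t/2. With the figures quoted above, a 270° field sampled every 0.25° gives 270 / 0.25 + 1 = 1081 beams per scan line.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

constexpr double kPi = 3.14159265358979323846;
constexpr double kDegToRad = kPi / 180.0;
constexpr double kSpeedOfLight = 299792458.0;  // [m/s]

struct Point3 {
    double x, y, z;
};

// Hypothetical container for one 2D scan line taken at a known servo angle.
struct Scan2D {
    double angle_min;            // angle of the first beam within the scan plane [rad]
    double angle_increment;      // angular step between neighbouring beams [rad]
    double servo_angle;          // rotation of the scan plane about the x-axis [rad]
    std::vector<double> ranges;  // measured distances [m]
};

// Time of flight: the pulse travels to the obstacle and back, hence the factor 1/2.
double rangeFromTimeOfFlight(double round_trip_s) {
    return kSpeedOfLight * round_trip_s / 2.0;
}

// Number of beams in a field of view sampled at a fixed resolution, counting both end beams.
std::size_t beamCount(double fov_deg, double resolution_deg) {
    return static_cast<std::size_t>(std::lround(fov_deg / resolution_deg)) + 1;
}

// Converts one scan line into 3D points in the servo frame.
// Assumption: the optical centre lies on the rotation axis (no mounting offset).
std::vector<Point3> scanTo3D(const Scan2D& scan, double max_range = 30.0) {
    assert(scan.angle_increment > 0.0);
    const double c = std::cos(scan.servo_angle);
    const double s = std::sin(scan.servo_angle);
    std::vector<Point3> points;
    points.reserve(scan.ranges.size());
    for (std::size_t i = 0; i < scan.ranges.size(); ++i) {
        const double r = scan.ranges[i];
        // Skip invalid returns (zero, negative, NaN) and readings beyond max_range.
        if (!(r > 0.0) || r > max_range) continue;
        const double theta = scan.angle_min + static_cast<double>(i) * scan.angle_increment;
        // Point in the unrotated scan plane: (r cos(theta), r sin(theta), 0).
        const double px = r * std::cos(theta);
        const double py = r * std::sin(theta);
        // Rotating about the x-axis leaves x unchanged and tilts y into z.
        points.push_back({px, py * c, py * s});
    }
    return points;
}
```

Calling `scanTo3D` once per servo position and concatenating the results yields the full point cloud. In practice the servo angle for each scan line would come from the servo's position feedback, which this sketch leaves out.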

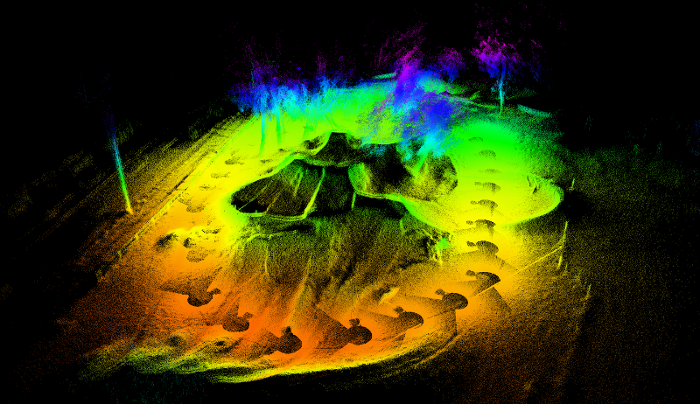

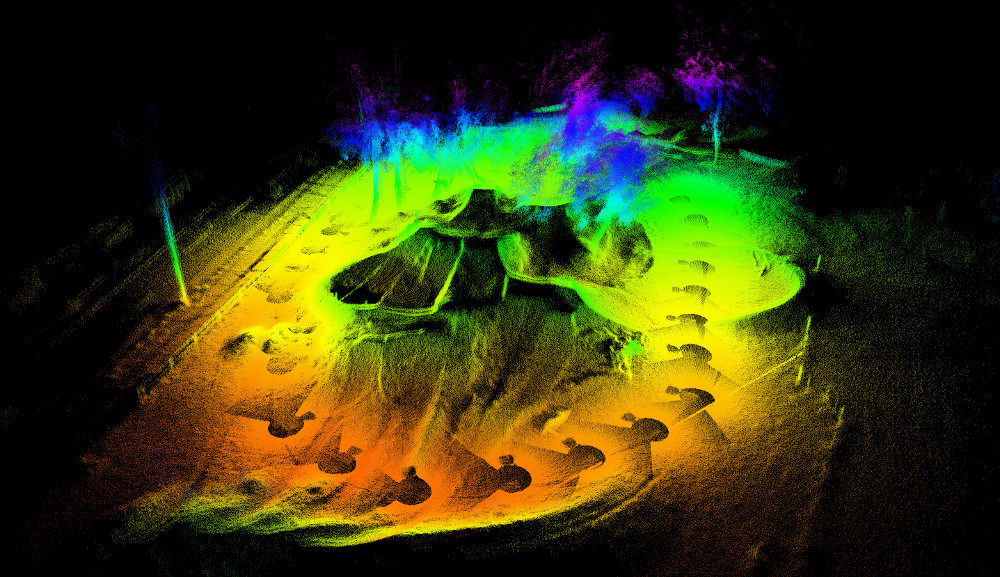

This 3D point cloud can be used for semantic interpretation or drivability assessment. In addition, scan matching algorithms can determine the transformation between two consecutive point clouds. In other words, they localize the laser scanner relative to the previous point cloud. Furthermore, the computed transformations and the scan data can be combined to build a map of the environment. Doing both at the same time is known as simultaneous localization and mapping (SLAM). The following image shows the result of an experiment carried out by the Chair of Automation Technology. It contains small hills, trees, a light pole, a handrail and some wooden posts.
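The text does not name a specific scan matcher; one widely used option is Iterative Closest Point (ICP). The sketch below is a minimal 2D point-to-point ICP, chosen for brevity rather than as the method used on this scanner. Each iteration does three things:

1. It pairs each scan point with its nearest reference point by brute force.
2. It solves for the best rigid transform in closed form.
3. It composes that update with the current estimate, stopping once the update becomes negligible.

The 3D case follows the same loop but needs an SVD-based rotation estimate. `Point2`, `Pose2`, `nearestIndex` and `icp2d` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Point2 {
    double x, y;
};

// Rigid 2D transform: rotate by theta, then translate by (tx, ty).
struct Pose2 {
    double theta, tx, ty;
    Point2 apply(const Point2& p) const {
        const double c = std::cos(theta), s = std::sin(theta);
        return {c * p.x - s * p.y + tx, s * p.x + c * p.y + ty};
    }
};

// Brute-force nearest neighbour, O(N) per query; practical systems use a k-d tree.
std::size_t nearestIndex(const std::vector<Point2>& cloud, const Point2& q) {
    std::size_t best = 0;
    double best_d2 = std::numeric_limits<double>::max();
    for (std::size_t j = 0; j < cloud.size(); ++j) {
        const double dx = cloud[j].x - q.x, dy = cloud[j].y - q.y;
        const double d2 = dx * dx + dy * dy;
        if (d2 < best_d2) {
            best_d2 = d2;
            best = j;
        }
    }
    return best;
}

// Point-to-point ICP: estimates the pose that maps `scan` onto `reference`.
Pose2 icp2d(const std::vector<Point2>& reference, const std::vector<Point2>& scan,
            int max_iterations = 50, double tolerance = 1e-10) {
    assert(!reference.empty() && !scan.empty());
    Pose2 pose{0.0, 0.0, 0.0};  // initial guess: identity
    for (int it = 0; it < max_iterations; ++it) {
        // 1. Transform the scan with the current estimate and pair each point
        //    with its nearest neighbour in the reference cloud.
        std::vector<Point2> src, dst;
        for (const Point2& p : scan) {
            const Point2 q = pose.apply(p);
            src.push_back(q);
            dst.push_back(reference[nearestIndex(reference, q)]);
        }
        // 2. Closed-form least-squares rigid alignment of the pairs.
        const double n = static_cast<double>(src.size());
        Point2 cs{0.0, 0.0}, cr{0.0, 0.0};  // centroids of src and dst
        for (std::size_t i = 0; i < src.size(); ++i) {
            cs.x += src[i].x; cs.y += src[i].y;
            cr.x += dst[i].x; cr.y += dst[i].y;
        }
        cs.x /= n; cs.y /= n; cr.x /= n; cr.y /= n;
        double dot = 0.0, cross = 0.0;
        for (std::size_t i = 0; i < src.size(); ++i) {
            const double ax = src[i].x - cs.x, ay = src[i].y - cs.y;
            const double bx = dst[i].x - cr.x, by = dst[i].y - cr.y;
            dot += ax * bx + ay * by;
            cross += ax * by - ay * bx;
        }
        const double dtheta = std::atan2(cross, dot);
        const double c = std::cos(dtheta), s = std::sin(dtheta);
        const Pose2 delta{dtheta, cr.x - (c * cs.x - s * cs.y), cr.y - (s * cs.x + c * cs.y)};
        // 3. Compose: the increment is applied after the current pose.
        const Point2 t = delta.apply({pose.tx, pose.ty});
        pose = Pose2{pose.theta + dtheta, t.x, t.y};
        if (std::fabs(dtheta) < tolerance && std::hypot(delta.tx, delta.ty) < tolerance) break;
    }
    return pose;
}
```

The returned pose maps the new scan into the frame of the previous one. The map building described above works on the same principle: chain these poses, transform each scan with its pose, and append it to a common point set. A practical SLAM system also adds a k-d tree for the neighbour search, outlier rejection and loop closure.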

The whole software is implemented in C++ within ROS (Robot Operating System). Despite its name, ROS is not an operating system in the strict sense; it runs on top of Linux. However, it provides several operating-system-like functions such as process management, interprocess communication and hardware abstraction.

SpaceBot Camp 2015

Construction Site

Cross Track

Mechanical Drawing

Alignment Optimization Matlab Toolbox

Please contact the author.

(2016) How to Build and Customize a High-Resolution 3D Laserscanner Using Off-the-shelf Components. In Proc. of Towards Autonomous Robotic Systems (TAROS). DOI: 10.1007/978-3-319-40379-3_33. Best Paper Award Winner