Visual Imitation Learning with Stability Guarantees

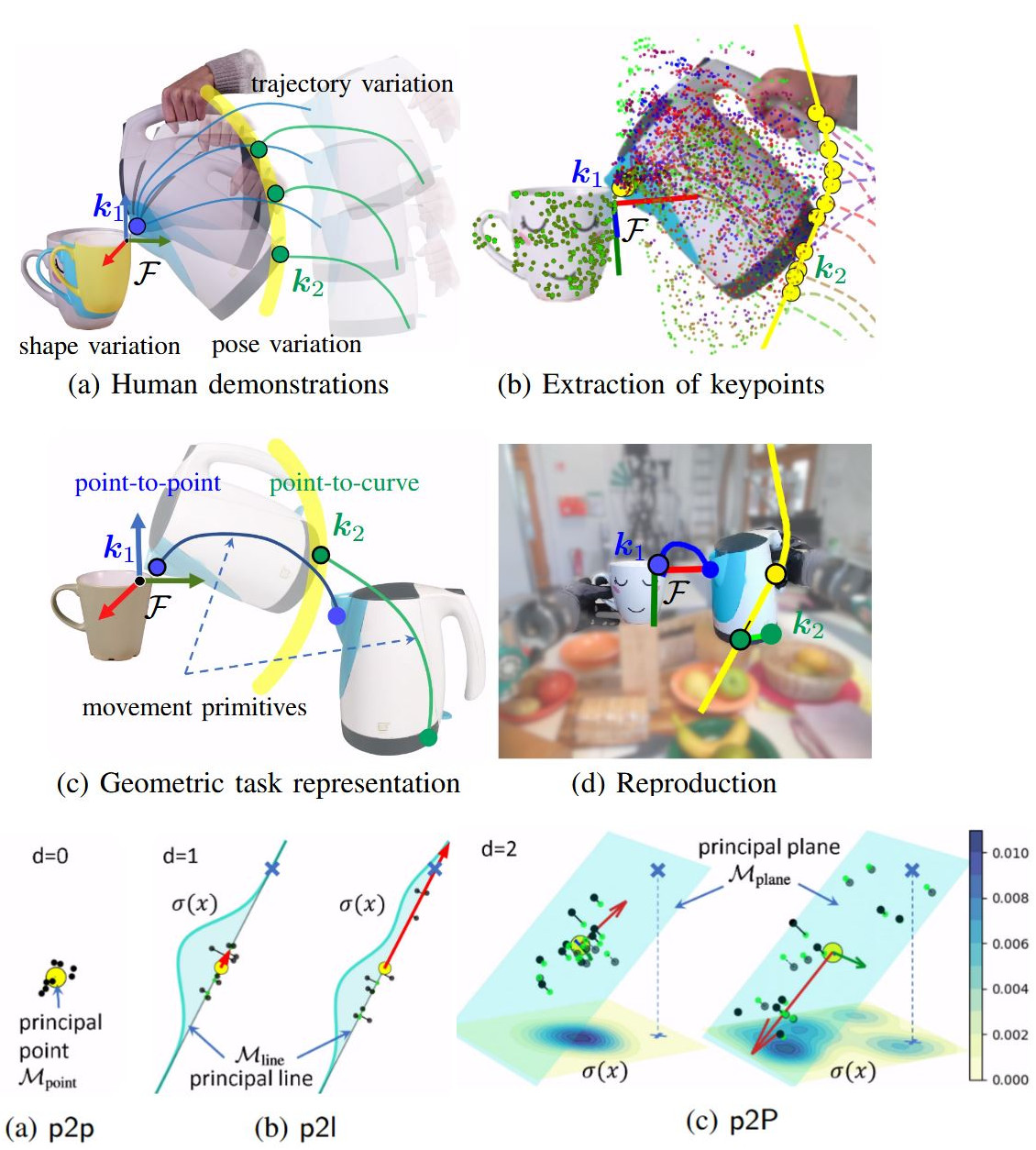

Imitation learning is a powerful paradigm that enables robots to learn new movement skills from kinesthetic or visual demonstrations. A recently proposed method leverages geometric object interaction primitives to model new movement skills in an object-centric manner, which makes the learned skills more flexible [1]. However, this method relies on an empirically tuned admittance controller and probabilistic movement primitives, neither of which provides stability guarantees, raising questions of safety and predictability.

An alternative to movement primitives is the class of dynamical-system-based movement policies, which have recently been integrated with deep neural networks. These policies provide a priori stability guarantees by design [2]. The goal of this thesis is to integrate these policies with keypoint-based imitation learning and to evaluate the resulting model on a real robotic system.

[1] Gao, Jianfeng, et al. "K-VIL: Keypoints-based visual imitation learning." IEEE Transactions on Robotics 39.5 (2023): 3888-3908.

[2] Totsila, Dionis, et al. "Sensorimotor Learning With Stability Guarantees via Autonomous Neural Dynamic Policies." IEEE Robotics and Automation Letters (2025).

Advisor:

- Lucas Schwarz, lucas.schwarz@…

Requirements:

- Strong mathematical foundations.

- Basic knowledge of control theory, robotics, optimization, and perception.

- Machine learning fundamentals.

Keypoints and object interaction primitives
