Research Area Perception

Object Pose Estimation

3D Reconstruction and Next Best View Planning

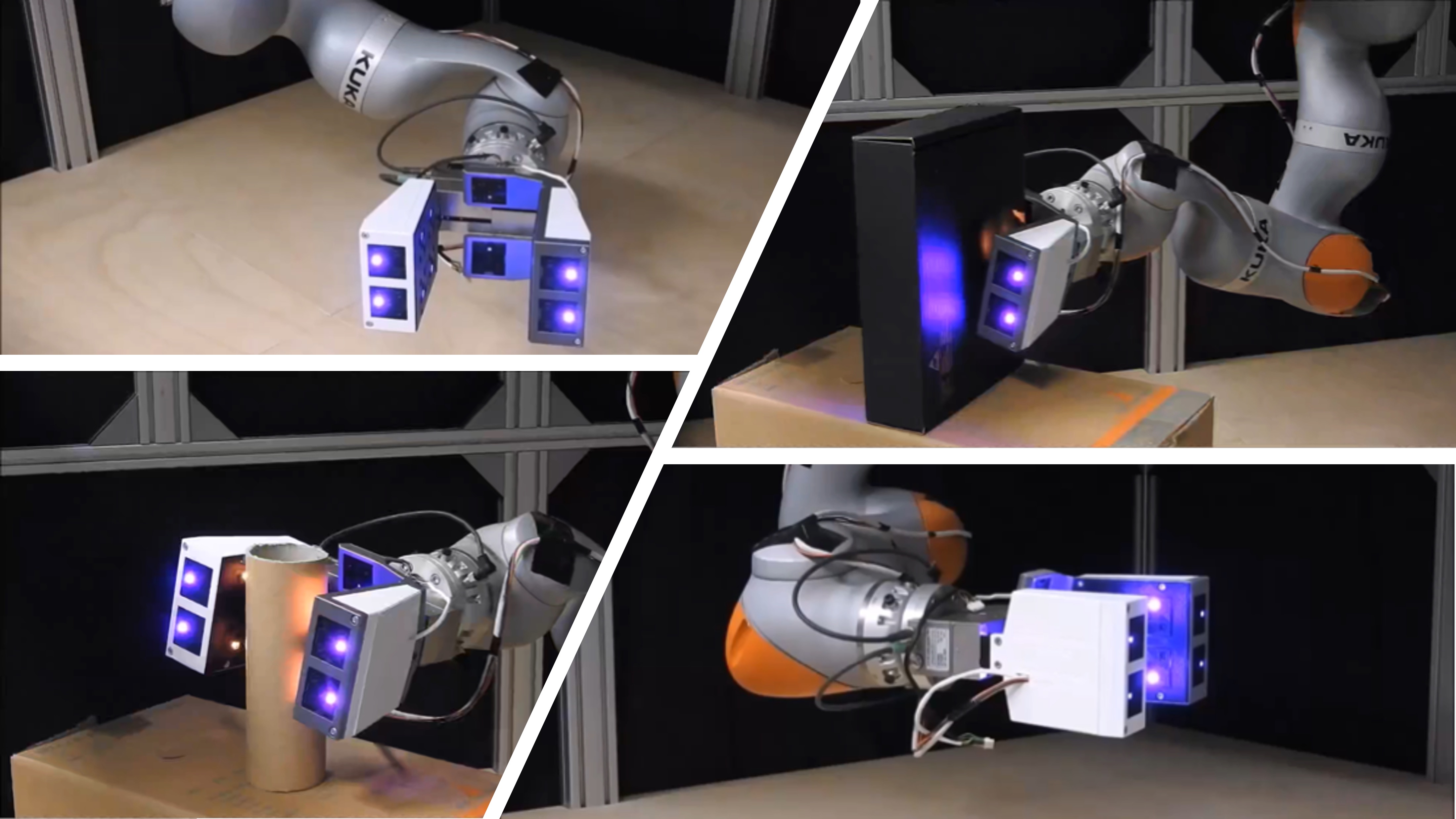

Bin Picking

Eye Gaze Tracking and Action Recognition

Hand and Object Pose Tracking

New Sensors

Environmental Perception

| Contact | Prof. Dr.-Ing. Ulrike Thomas |

| Funded by |   |