Multistable perception across modalities

Resolving sensory ambiguity in vision and audition

A joint project by the Cognitive Systems Lab and the Physics of Cognition Group, funded by the German Research Foundation.

Our sensory systems continuously infer a unique perceptual interpretation from ambiguous sensory data. Multistable perception, in which an unchanging stimulus gives rise to alternating percepts, offers experimental access to this process. While multistable perception has been well studied within individual sensory modalities, research on interactions between modalities is scarce. Our research aims to close this gap. What do auditory and visual multistability have in common? Do the same principles underlie the resolution of sensory ambiguity? Do the underlying mechanisms overlap? To answer these questions, we use state-of-the-art methods to objectively measure apparently subjective perceptual states. We expect our research to yield a better understanding of cross-modal interactions, which are highly relevant in real-life situations.


Examples of multistability

|

|

|

|

|

|

| pattern/ component |

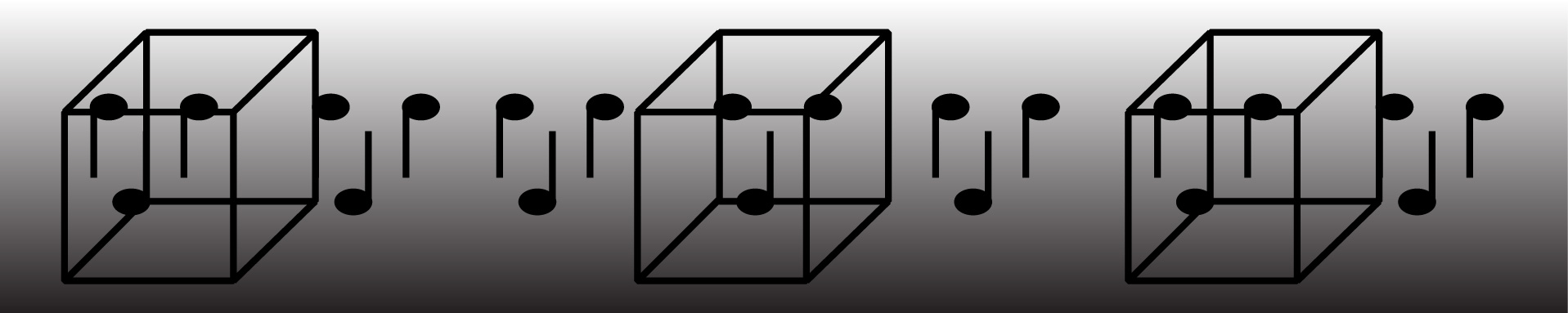

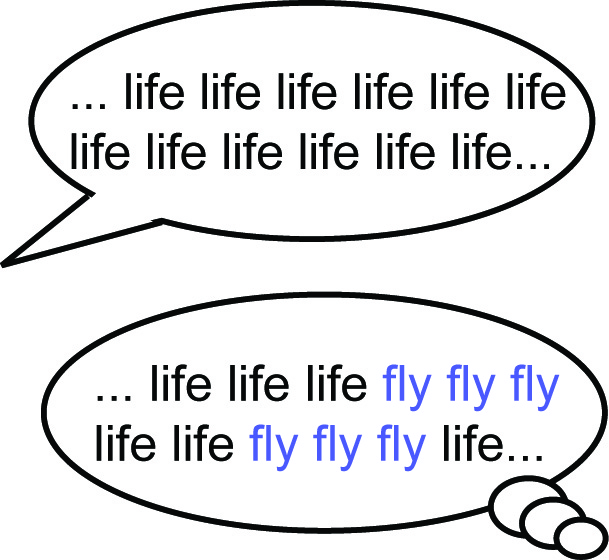

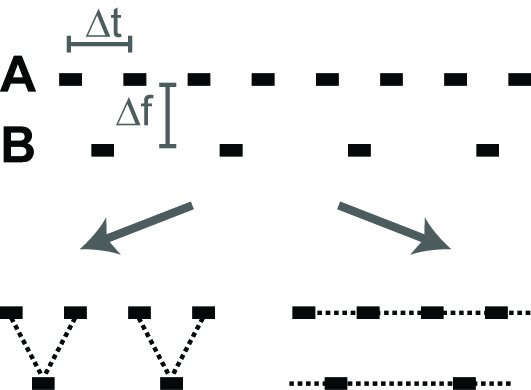

object emergence | structure from motion | apparent motion | verbal trans-formations | auditory streaming |