Research Seminar

The research seminar is aimed at interested students in the Master's or Bachelor's programs. Other interested parties are nevertheless welcome at any time! Students and staff of the Professorship of Artificial Intelligence present current research-oriented topics. Talks are usually given in English. For the exact date of each event, please refer to the announcements on this page.

Information for Bachelor's and Master's Students

The seminar talks that form part of the degree programs (the "Hauptseminar" in the Bachelor-IF/AIF program and the "Forschungsseminar" in the Master's program) can be completed as part of this event. Both courses (the Bachelor's Hauptseminar and the Master's Forschungsseminar) aim to have participants independently acquire research-relevant knowledge and then present it in a talk. Candidates are expected to have sufficient background knowledge, which is usually acquired by attending the lectures Neurocomputing (formerly Maschinelles Lernen) or Neurokognition (I+II). The research topics typically come from the areas of artificial intelligence, neurocomputing, deep reinforcement learning, neurocognition, and neurorobotic and intelligent agents in virtual reality. Other topic proposals are just as welcome!

The seminar is organized by individual arrangement. Students who are interested in completing one of the two seminar courses with us can contact Prof. Hamker without obligation.

Past Events

Biologically Inspired Active Vision
Jan Chevalier, Tue, 17. 2. 2026, A10.367 and https://webroom.hrz.tu-chemnitz.de/gl/mic-cv7-ptw
Active vision in robots can be addressed with biologically inspired approaches. Two different approaches that let a robot find its way around autonomously are presented. The first is a model that builds its own internal model of the environment ("A Hierarchical System for a Distributed Representation of the Peripersonal Space of a Humanoid Robot", M. Antonelli, 2014). The second is a model that was tested on three different robot heads ("A Portable Bio-Inspired Architecture for Efficient Robotic Vergence Control", A. Gibaldi, 2017). In addition, an approach to biologically inspired object recognition is presented ("Active Vision : on the relevance of a Bio-inspired approach for object detection", K. Hoang, 2019).

Exploring Reinforcement learning strategies in automated wheel truing
Toni Rozsahegyi, Tue, 17. 2. 2026, A10.367 and https://webroom.hrz.tu-chemnitz.de/gl/mic-cv7-ptw
Spoked bicycle wheel truing, correcting rim deviations by adjusting spoke tensions, presents a challenging testbed for reinforcement learning due to its high-dimensional state space, non-local action effects, and multi-objective constraints. The central question is whether RL agents can learn effective truing policies in simulation, comparing value-based (Rainbow DQN), policy-gradient (PPO), and model-based (TD-MPC2) approaches across different state representations, action spaces, and reward functions. A systematic ablation study reveals substantial performance differences across these design choices, with some results challenging conventional intuitions about state representation and action space formulation. The insights from these experiments inform the selection and optimization of a candidate policy for future sim-to-real transfer to physical truing machines.

Part 2: Why did I drive to work on my day off: Perspectives on goal-directed and habitual behavior with implications for future AI systems
Erik Syniawa, Tue, 3. 2. 2026, A10.208.1 and https://webroom.hrz.tu-chemnitz.de/gl/mic-cv7-ptw
Although modern AI systems excel at complex tasks such as language generation, they lack the adaptive efficiency that characterizes human behavior. Humans seamlessly balance deliberate planning with automatic responses depending on context and computational demands. In this talk we will discuss recent frameworks suggesting that goal-directed and habitual control are integrated rather than separate systems. Butz et al. (2025) propose a computational account where context inference optimizes cognitive effort, while Hamker et al. (2025) propose an anatomical perspective through interacting cortico-basal ganglia loops and learned shortcuts. I will outline the potential synergies and incompatibilities that could arise in integrating these frameworks. Finally, I will discuss how principles from these frameworks, particularly context integration and shortcuts, could inspire more efficient AI architectures and how these principles relate to already published AI approaches.

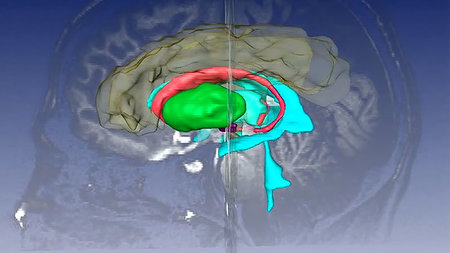

Interacting cortico-basal ganglia-thalamocortical loops in behavioral control
Fred Hamker, Tue, 27. 1. 2026, A10.208.1 and https://webroom.hrz.tu-chemnitz.de/gl/mic-cv7-ptw
I will present a new framework explaining how the brain controls goal-directed behavior, habits, and their learning processes. I propose that goal selection is implemented through the limbic loop, which connects the hippocampus to the ventral basal ganglia. This loop retrieves goal plans from memory, allowing the brain to 'virtualize' potential outcomes based on prior experiences. Once a goal is selected, plan execution is carried out by other, hierarchically organized cortico-basal ganglia circuits, with lower-level loops being guided by objectives set by higher-level ones. I will also present findings from a neuro-computational model applied to a well-known two-stage decision-making task. Our results highlight the central role of hippocampal replay in learning and behavior, particularly in distinguishing between model-based and model-free learning strategies.

Explaining the Interaction Between Criticality and Evolutionary Success in Recurrent Neural Network Models for Dynamical Control
Idris Hafsa, Tue, 20. 1. 2026, A10.208.1 and https://webroom.hrz.tu-chemnitz.de/gl/mic-cv7-ptw
Neural networks operating in real-world environments are often exposed to noisy and unpredictable inputs, which can lead to unstable behavior. This work investigates how the dynamical regime of recurrent neural networks influences their robustness under such perturbations, with a focus on the concept of criticality. Recurrent networks were trained in a controlled environment and subjected to external disturbances to evaluate their stability and performance. The results indicate that classical indicators of criticality do not provide a reliable predictor of robustness or performance. Instead, performance is strongly shaped by emergent behavioral strategies and task-specific dynamics, highlighting the need for alternative approaches to studying stability and robustness in recurrent neural systems.

Why did I drive to work on my day off: Perspectives on goal-directed and habitual behavior with implications for future AI systems
Erik Syniawa, Tue, 16. 12. 2025, A10.208.1 and https://webroom.hrz.tu-chemnitz.de/gl/mic-cv7-ptw
Although modern AI systems excel at complex tasks such as language generation, they lack the adaptive efficiency that characterizes human behavior. Humans seamlessly balance deliberate planning with automatic responses depending on context and computational demands. In this talk we will discuss recent frameworks suggesting that goal-directed and habitual control are integrated rather than separate systems. Butz et al. (2025) propose a computational account where context inference optimizes cognitive effort, while Hamker et al. (2025) propose an anatomical perspective through interacting cortico-basal ganglia loops and learned shortcuts. I will outline the potential synergies and incompatibilities that could arise in integrating these frameworks. Finally, I will discuss how principles from these frameworks, particularly context integration and shortcuts, could inspire more efficient AI architectures and how these principles relate to already published AI approaches.

Perisaccadic attentional updating in Area V4: A neurocomputational approach
Julia Bergelt, Tue, 18. 11. 2025, A10.208.1 and https://webroom.hrz.tu-chemnitz.de/gl/mic-cv7-ptw
Psychophysical studies with human subjects investigated the dynamics of spatial visual attention around the time of saccades. They revealed that attention pointers remap against saccade direction, but at the same time show a lingering at the irrelevant retinotopic position after saccade. Involved brain areas have remained elusive. However, recordings from neurons in visual area V4 of macaques, obtained from a novel experimental design, confirm remapping of spatial attention to a position opposite to the saccade direction shortly before saccade onset (Marino & Mazer, 2018). Unexpectedly, in comparison with behavioral data from human subjects, a neural correlate of lingering of spatial visual attention has not been observed in V4. To better understand the underlying computational mechanisms of attentional updating in V4, we developed a neurocomputational model of perisaccadic visual attention in area V4 by incorporating perisaccadic spatio-temporal signals from the frontal eye field (FEF) and the lateral intraparietal area (LIP). When we test this model on the same task, our obtained data replicates the observation of predictive remapping of spatial attention pointers in V4. Further, our model provides an intuitive explanation for the lack of lingering attention in the neural recordings: As the attended location is only cued during instruction trials, endogenous attention is required, which might have faded away due to a continuous stream of visual input in each trial. By deactivating different pathways in the neurocomputational model, our model predicts that the attentional enhancement measured in V4 originates from spatial updating and not from a saccade target attention pointer.

Exploring Deep Learning Approaches for 3D Deformation: Toward Finite Element Method Distillation
Aida Farahani, Tue, 11. 11. 2025, A10.208.1 and https://webroom.hrz.tu-chemnitz.de/gl/mic-cv7-ptw
Thesis test presentation: The thesis introduces neural frameworks for modeling 3D deformations with the goal of enhancing the predictive accuracy and computational efficiency of traditional Finite Element Method (FEM) simulations. It explores two complementary approaches: a single-step deformation model that employs implicit neural representations and signed distance fields to approximate FEM-based deformations up to 400× faster than conventional simulations, and a multi-step deformation model that formulates deformation as a sequential decision-making process within a deep reinforcement learning framework. Supported by two custom datasets, DefBeam and DefCube, the study demonstrates that AI-driven methods can effectively complement FEM by accelerating simulations and improving accuracy in applications such as material design, virtual prototyping, and industrial forming.

Bridging Conflicting Views on Eye Position Signals: A Neurocomputational Approach to Perisaccadic Perception
Nikolai Stocks, Tue, 4. 11. 2025, A10.208.1 and https://webroom.hrz.tu-chemnitz.de/gl/mic-cv7-ptw
Saccades are an integral component of visual perception, yet the accuracy and role of eye position signals in the brain remain unclear. The classical model of perisaccadic perception posits that the dorsal visual system combines an imperfect eye position signal with visual input, leading to systematic perisaccadic mislocalizations under specific experimental conditions. However, neurophysiological studies of eye position information have produced seemingly conflicting results. One team of researchers observed the eye position signal directly in gain-field neurons in the lateral intraparietal area (LIP) and found it incompatible with the classical model. In contrast, another team reported evidence for an eye position signal consistent with the classical model, even showing that accurate eye position can be decoded from neural activity. We modeled two subpopulations of neurons in LIP receiving input from two different sources, one representing the corollary discharge containing predictive presaccadic signals, the other representing a slowly updating proprioceptive eye position signal. By decoding eye position from the neural activity of these subpopulations, we observed that the model contains sufficient information for the decoder to accurately predict and track the perisaccadic eye position. Our findings reconcile the apparent contradiction between the different neurophysiological studies by providing a unified framework for understanding eye position signals in perisaccadic perception. Our results suggest that a combination of a late-updating proprioceptive signal and a predictive corollary discharge is sufficient for accurately decoding eye position.

Interacting corticobasal ganglia-thalamocortical loops shape behavioral control through cognitive maps and shortcuts
Fred Hamker, Tue, 28. 10. 2025, A10.208.1 and https://webroom.hrz.tu-chemnitz.de/gl/mic-cv7-ptw
Control of behavior is often explained in terms of a dichotomy, with distinct neural circuits underlying goal-directed and habitual control, yet accumulating evidence suggests these processes are deeply intertwined. We propose a novel anatomically informed cognitive framework, motivated by interacting corticobasal ganglia-thalamocortical loops as observed in different mammals. The framework shifts the perspective from a strict dichotomy toward a continuous, integrated network where behavior emerges dynamically from interacting circuits. Decisions within each loop contribute contextual information, which is integrated with goal-related signals in the basal ganglia input, building a network of dependencies. Loop-bypassing shortcuts facilitate habit formation. Striatal integration hubs may function analogously to attention mechanisms in Transformer neural networks, a parallel we explore to clarify how a variety of behaviors can emerge from an integrated network.

Evaluating Speculative Decoding in Large Language Models: A Comparative Study of MEDUSA and EAGLE Architectures on Hallucination Risks
Alaa Alshroukh, Tue, 21. 10. 2025, A10.208.1 and https://webroom.hrz.tu-chemnitz.de/gl/mic-cv7-ptw
Large Language Models (LLMs) deliver strong performance on tasks such as summarization and question answering, but autoregressive decoding remains a latency bottleneck in real-time applications due to its sequential, compute-intensive nature. This bottleneck arises in part because each decoding step requires transferring the entire set of model parameters from high-bandwidth memory (HBM) to the accelerator's cache, which limits throughput and underutilizes computational resources. To address this challenge, two acceleration methods are examined: EAGLE (Extrapolation Algorithm for Greater Language-model Efficiency), a speculative sampling framework, and MEDUSA, a multi-head inference approach. Both techniques reduce latency by generating multiple tokens per step. Performance is evaluated by comparing each accelerated model to its baseline on summarization tasks, enabling controlled within-model analyses of speed and output quality. Particular attention is given to hallucination (fluent yet factually unsupported output), since generating multiple tokens at once can amplify small inaccuracies into extended errors. Results indicate a clear speed-factuality trade-off: EAGLE achieves the lowest latency and highest throughput while broadly preserving source meaning, but exhibits reduced factual stability on dense inputs. MEDUSA is slower but demonstrates stronger adherence to the source and more consistent factuality. Overall, the findings emphasize that accelerated LLM decoding requires careful balancing of efficiency and reliability, especially in fact-sensitive applications.
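As a rough illustration of how "generating multiple tokens per step" can work, the sketch below shows a generic greedy draft-and-verify loop. It is not EAGLE or MEDUSA, and it is not taken from the talk: the two "models" are hypothetical toy functions over integer tokens, and the verification step that a real system would run as one batched forward pass is written as a plain loop for readability.

```python
from typing import Callable, List, Tuple

Token = int
NextToken = Callable[[List[Token]], Token]  # maps a context to its next token


def greedy_decode(model: NextToken, prompt: List[Token], n_new: int) -> List[Token]:
    """Plain autoregressive decoding: one model call per generated token."""
    seq = list(prompt)
    for _ in range(n_new):
        seq.append(model(seq))
    return seq


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[Token],
                       n_new: int, k: int = 4) -> Tuple[List[Token], int]:
    """Greedy draft-and-verify decoding.

    Returns the generated sequence and the number of verification rounds.
    """
    seq = list(prompt)
    goal = len(prompt) + n_new
    rounds = 0
    while len(seq) < goal:
        rounds += 1
        # 1) The draft model proposes up to k tokens autoregressively.
        proposal: List[Token] = []
        for _ in range(min(k, goal - len(seq))):
            proposal.append(draft(seq + proposal))
        # 2) The target model checks each proposed position in order.
        accepted: List[Token] = []
        for tok in proposal:
            expected = target(seq + accepted)
            if tok == expected:
                accepted.append(tok)
            else:
                # First mismatch: keep the target's token, drop the rest.
                accepted.append(expected)
                break
        else:
            # Every proposal matched, so the target contributes one extra token.
            if len(seq) + len(accepted) < goal:
                accepted.append(target(seq + accepted))
        seq.extend(accepted)
    return seq, rounds


# Hypothetical toy models (assumptions for illustration only).
def toy_target(seq: List[Token]) -> Token:
    return (3 * seq[-1] + len(seq)) % 7


def toy_draft(seq: List[Token]) -> Token:
    # Agrees with the target except when the context length is a multiple of 5.
    guess = toy_target(seq)
    return (guess + 1) % 7 if len(seq) % 5 == 0 else guess


if __name__ == "__main__":
    prompt = [1, 2]
    reference = greedy_decode(toy_target, prompt, 20)
    output, rounds = speculative_decode(toy_target, toy_draft, prompt, 20, k=4)
    print("identical to plain greedy decoding:", output == reference)
    print("verification rounds:", rounds)
```

With greedy verification, every emitted token is the one the target model would have chosen, so the output matches plain greedy decoding and only the number of target rounds changes. Sampling-based or tree-based schemes relax this exact-match rule in various ways, which is one place where quality differences of the kind discussed in the abstract could arise.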

Part 2: Key Concepts from the Nengo Summer School 2025
Erik Syniawa, Tue, 14. 10. 2025, A10.208.1
This talk presents key concepts from the Nengo Summer School, focusing on the Neural Engineering Framework (NEF) and its implementation through Nengo. The NEF provides three core principles: representation (how populations encode information), computation (how connections compute functions), and dynamics (how recurrent networks implement differential equations). These principles enable the construction of large-scale spiking neural networks for cognitive modeling, such as Spaun. The talk covers the Semantic Pointer Architecture (SPA), a cognitive architecture that uses compressed neural representations to connect low-level neural activity with high-level symbolic reasoning. It also examines Legendre Memory Units (LMUs), an efficient method for processing temporal information in neural networks. Additionally, I will present my project work: a biologically inspired architecture that combines a visual pathway with the basal ganglia to play different Atari games.

Extending an Attention Model with a Learned IT Layer
Lucas Berthold, Thu, 11. 9. 2025, 1/336 and https://webroom.hrz.tu-chemnitz.de/gl/mic-cv7-ptw
Why object representations in the human visual system remain stable even though the eyes are constantly moving is still one of the central open questions in neuroscience. The inferotemporal cortex (IT) is thought to play a crucial role in object recognition by encoding objects as stable representations (Li and DiCarlo, 2008). Despite the robustness of these representations to changes in an object's position on the retina, Li and DiCarlo (2008) showed that, after targeted manipulation at a specific retinal position, individual IT neurons that had previously responded selectively to particular objects lost selectivity. The central question of this work is whether the effects observed by Li and DiCarlo (2008) can also be reproduced in an artificial neural model when only the connections from a higher visual area of an existing attention model (Beuth, 2019) to an IT layer are learned, taking lateral inhibition into account. This requires the IT neurons to be both selective for particular objects and position-invariant. The results show that the neurons of the learned IT layer can respond in an object-specific and position-invariant manner. However, the observations from the manipulation experiment by Li and DiCarlo (2008) could not be replicated.