In-house project: ZYFPOR - Zynq Flexible Platform for Object Recognition

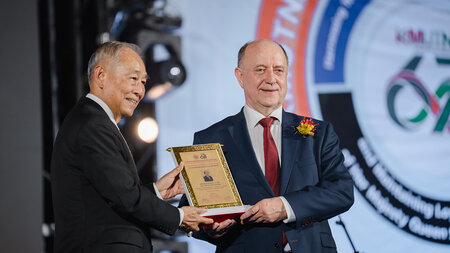

Git repository of the ZYFPOR project, winner of the Xilinx Open Hardware Design Contest 2015 in the Zynq category.

Content

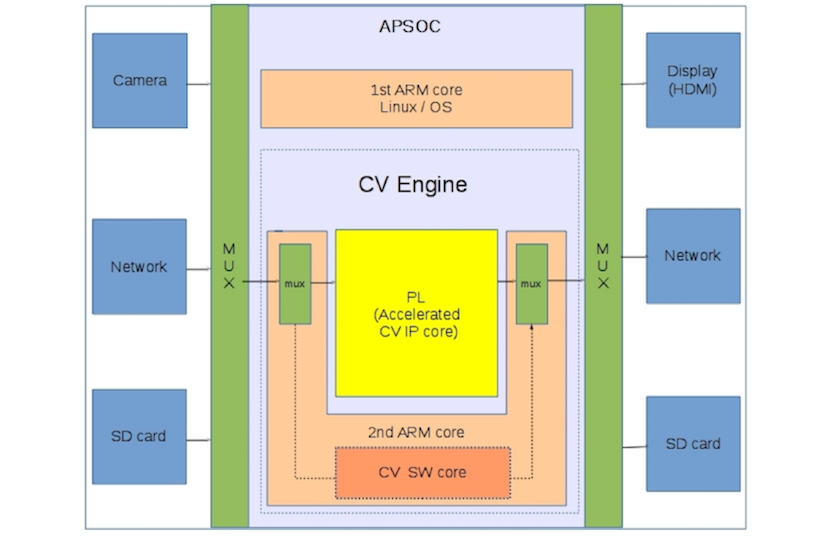

This project uses the ZYNQ-7000 All Programmable SoC for flexible object recognition applications by combining scalable hardware with open-source software. The aim is to test the capabilities and limitations of the embedded processor cores and the programmable logic for computer vision applications, and to gain experience with object recognition and detection. The target use case is indoor mapping and localization.

The design uses the capabilities of the ZedBoard by combining the programmable logic (PL) and the processing system (PS) to accelerate the computer vision algorithms. The Zynq device on the ZedBoard contains a dual-core ARM Cortex-A9 processor: one core runs the operating system, while the other is dedicated to video processing.

The video source can be a USB camera, an Ethernet stream or a video file on the SD card. The video stream is routed through the PS into shared memory, where both hardware and software can access it, so a captured image can be processed in hardware, in software or in a combination of both. The result can be shown on an HDMI display or sent over Ethernet. The HDMI interface is built from the ADV7511 reference design and is integrated into the PL.

The computer vision algorithms use the OpenCV libraries on the PS side, while the PL side was built with the Xilinx Vivado HLS Video Library. Edge detection algorithms (Sobel, Canny) as well as feature extraction and description (Oriented BRIEF) have been implemented and tested in a corridor at Chemnitz University of Technology to detect door handles.
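Oriented BRIEF (as used in ORB, "Oriented FAST and Rotated BRIEF") produces binary descriptors, which are usually compared by Hamming distance. The following plain C++ sketch shows brute-force matching of such descriptors. It is not taken from the project's sources; the 32-byte (256-bit) descriptor size is the OpenCV ORB default, and the function names and the `maxDistance` threshold are hypothetical. In OpenCV itself, `cv::BFMatcher` with `cv::NORM_HAMMING` performs the same kind of search.

```cpp
#include <array>
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// A 256-bit binary descriptor stored as 32 bytes (the default ORB size in OpenCV).
using Descriptor = std::array<std::uint8_t, 32>;

// Hamming distance: number of bit positions in which two descriptors differ.
int hammingDistance(const Descriptor& a, const Descriptor& b) {
    int dist = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        dist += static_cast<int>(std::bitset<8>(a[i] ^ b[i]).count());
    }
    return dist;
}

// Brute-force search: index of the train descriptor closest to the query,
// or -1 if the best distance exceeds maxDistance (a hypothetical threshold).
int bestMatch(const Descriptor& query, const std::vector<Descriptor>& train, int maxDistance) {
    assert(maxDistance >= 0);
    int bestIdx = -1;
    int bestDist = std::numeric_limits<int>::max();
    for (std::size_t i = 0; i < train.size(); ++i) {
        const int d = hammingDistance(query, train[i]);
        if (d < bestDist) {
            bestDist = d;
            bestIdx = static_cast<int>(i);
        }
    }
    return bestDist <= maxDistance ? bestIdx : -1;
}
```

In a detector, the train set could hold descriptors taken from reference images of the object; XOR plus a bit count is cheap, which is one reason binary descriptors suit an embedded ARM core.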

Board used: ZedBoard

Vivado Version: 2014.4

The repository consists of the following directories:

- /APSOC_CV - Linux program, built with CMake

- /image - boot files required on partition 1 of the SD card

- /src - source files of the Linux program and of the Vivado HLS project (C sources)

- --> /src/linux_program - source and header files of the Linux program

- --> /src/vivado_hls - C sources of the image filter

- /vivado_hls_project/zyfpor_ip - ZYFPOR Vivado HLS project

- /vivado_project - tar.xz archive of the Vivado project

Instructions to build and test the project

Hardware

The Vivado and Vivado HLS projects can be opened using the respective Xilinx tools (Vivado and Vivado High Level Synthesis).

Software

- Step 1: Copy the files located in /image/ to partition 1 of the ZedBoard's SD card.

- --> Partition 2 must contain a working Linux distribution (e.g. Raspbian, Arch Linux ARM) with a desktop environment.

- Step 2: Copy the /APSOC_CV directory to the Linux system on partition 2 of the SD card, e.g. into the home directory.

- --> The OpenCV library and CMake must be installed on that Linux system.

- Step 3: In a terminal, change into the APSOC_CV directory.

- Step 4: Type "make"; this calls a script that builds the application.

- Step 5: Launch the application by typing "sudo ./APSOC_CV".

- --> The software needs root privileges (sudo) to access the PL.

- Step 6: The application menu appears. Type "b" and press Enter to start the desired application.

- --> The other menu functions should work as well. Applications that read a video file expect it at "../../testimages/sequences_a5100/00001_half.avi".

- --> To open a video with a different name or location, change the corresponding line in "apsoc_cv_parameters.h".

- --> The video resolution should be 640x480, as set in the same file.
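The key-driven menu from Step 6 (handled in apsoc_cv_main.cpp) could be structured roughly as in the following sketch, which maps a key to an action. Only the "b" key is documented in this README; the quit key "q", the `Menu` type and the `runMenu` function are hypothetical and not taken from the project's sources.

```cpp
#include <cassert>
#include <functional>
#include <istream>
#include <map>
#include <sstream>  // std::istringstream, handy for scripted input in tests
#include <string>

// Hypothetical menu table: key typed by the user -> action to run.
using MenuAction = std::function<void()>;
using Menu = std::map<char, MenuAction>;

// Reads lines until 'q' or end of input and runs the action bound to the
// first character of each line. Returns the number of actions executed.
int runMenu(std::istream& in, const Menu& menu) {
    assert(menu.count('q') == 0 && "'q' is reserved for quitting");
    int executed = 0;
    std::string line;
    while (std::getline(in, line)) {
        if (line.empty()) continue;   // ignore a bare Enter
        const char key = line[0];
        if (key == 'q') break;        // hypothetical quit key
        const auto it = menu.find(key);
        if (it != menu.end()) {
            it->second();
            ++executed;
        }                             // unknown keys are ignored
    }
    return executed;
}
```

In the application, `runMenu` would be called with `std::cin`; taking a `std::istream&` instead of reading `std::cin` directly keeps the dispatch logic testable.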

Short description of the software application's files

- apsoc_cv_apps.cpp - contains all software applications, such as grabbing images from a webcam or the detection function

- apsoc_cv_apps.h - the corresponding header file

- apsoc_cv_camera.cpp - contains functions for accessing the webcam

- apsoc_cv_camera.h - function prototypes

- apsoc_cv_filter.cpp - contains functions for accessing the image processing IP core

- apsoc_cv_filter.h - function prototypes and struct declarations

- apsoc_cv_main.cpp - the main function of the application; handles user input

- apsoc_cv_parameters.h - stores the parameters used by the applications, such as the input video resolution and the video file location

- apsoc_cv_vdma.cpp - contains functions for controlling the VDMA

- apsoc_cv_vdma.h - function prototypes and VDMA register addresses/offsets

- ximage_filter_hw.h - register addresses of the image processing IP core

- ap_fixed_sim.h; ap_int.h; ap_int_sim.h; ap_private.h - Vivado HLS header files for the arbitrary-precision integer and fixed-point data types, used to read the results of the Hough Line Transform computed in the PL of the ZYNQ.
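Files such as apsoc_cv_vdma.cpp and ximage_filter_hw.h revolve around memory-mapped AXI4-Lite registers. From Linux user space, such registers are commonly reached by mapping them through /dev/mem, which requires root rights and would explain the "sudo" in Step 5. The following sketch shows that pattern under stated assumptions: the S2MM register offsets follow the Xilinx AXI VDMA product guide (PG020), and the control-register bits follow the layout Vivado HLS generates for an AXI4-Lite `ap_ctrl_hs` interface. The function names, the use of the S2MM (write-to-memory) channel and the single frame buffer are hypothetical choices for this example, not the project's actual code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <fcntl.h>      // open
#include <sys/mman.h>   // mmap
#include <sys/types.h>  // off_t
#include <unistd.h>     // close

// AXI VDMA S2MM (stream-to-memory) register offsets from the AXI VDMA
// product guide (PG020). The project's apsoc_cv_vdma.h may name them differently.
constexpr std::size_t S2MM_VDMACR        = 0x30;
constexpr std::size_t S2MM_VSIZE         = 0xA0;
constexpr std::size_t S2MM_HSIZE         = 0xA4;
constexpr std::size_t S2MM_FRMDLY_STRIDE = 0xA8;
constexpr std::size_t S2MM_START_ADDR1   = 0xAC;

// Control register of a Vivado HLS core with an AXI4-Lite ap_ctrl_hs interface.
constexpr std::size_t   HLS_AP_CTRL = 0x00;
constexpr std::uint32_t AP_START    = 1u << 0;
constexpr std::uint32_t AP_DONE     = 1u << 1;

inline void writeReg(volatile std::uint32_t* base, std::size_t offset, std::uint32_t value) {
    base[offset / 4] = value;  // offsets are in bytes, registers are 32-bit
}

inline std::uint32_t readReg(volatile std::uint32_t* base, std::size_t offset) {
    return base[offset / 4];
}

// Maps a register window through /dev/mem; needs root (hence "sudo").
// physAddr must be page-aligned. Returns nullptr on failure.
volatile std::uint32_t* mapRegisters(off_t physAddr, std::size_t length) {
    const int fd = open("/dev/mem", O_RDWR | O_SYNC);
    if (fd < 0) return nullptr;
    void* p = mmap(nullptr, length, PROT_READ | PROT_WRITE, MAP_SHARED, fd, physAddr);
    close(fd);  // the mapping remains valid after the descriptor is closed
    return p == MAP_FAILED ? nullptr : static_cast<volatile std::uint32_t*>(p);
}

// Programs the S2MM channel to write frames into one buffer.
// Writing VSIZE last is what starts the transfer.
void startVdmaWrite(volatile std::uint32_t* vdma, std::uint32_t frameAddr,
                    std::uint32_t width, std::uint32_t height, std::uint32_t bytesPerPixel) {
    const std::uint32_t lineBytes = width * bytesPerPixel;
    assert(lineBytes <= 0xFFFF && "HSIZE and stride are 16-bit fields");
    writeReg(vdma, S2MM_VDMACR, 0x1);               // RS (run/stop) = 1, other bits 0
    writeReg(vdma, S2MM_START_ADDR1, frameAddr);
    writeReg(vdma, S2MM_FRMDLY_STRIDE, lineBytes);  // stride = one line, frame delay 0
    writeReg(vdma, S2MM_HSIZE, lineBytes);
    writeReg(vdma, S2MM_VSIZE, height);
}

// Sets ap_start to launch one run of the HLS core.
void startHlsCore(volatile std::uint32_t* core) {
    writeReg(core, HLS_AP_CTRL, AP_START);
}

// On hardware ap_done is clear-on-read, so poll it from one place only.
bool hlsCoreDone(volatile std::uint32_t* core) {
    return (readReg(core, HLS_AP_CTRL) & AP_DONE) != 0;
}
```

On the board, the `vdma` and `core` pointers would come from `mapRegisters` with the base addresses assigned in the Vivado address editor; keeping register access behind `writeReg`/`readReg` also lets the logic be exercised against a plain array, without hardware.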

Results

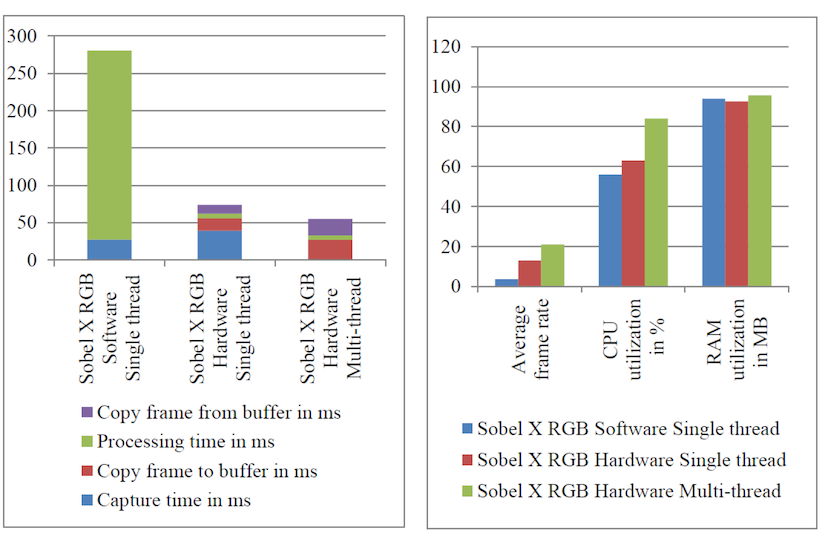

With their flexible hardware configuration, All Programmable SoCs provide a platform for versatile applications. Combining Artix-7 FPGA fabric with ARM Cortex-A9 cores, the ZYNQ-7000 APSoC is well suited for testing computer vision algorithms both in software and in hardware on the same silicon.

The Computer Vision Evaluation Platform was designed with a focus on scalability and reusability, and it is open source. The software components were built from the ground up on available code repositories to support the required hardware interfaces and software libraries for developing and testing computer vision applications. The hardware infrastructure was designed to support a graphical user interface for viewing the application output in real time, as well as hardware acceleration.

Accelerating an OpenCV-based application with the Vivado HLS design flow and the HLS OpenCV libraries exploits the APSoC by selectively moving the computation-intensive algorithms into hardware, while the same application code serves both the hardware and the software implementation. The developer can therefore concentrate on optimizing and deriving new designs while staying at the algorithmic level of the design process.

Popular edge detection algorithms, which form the basis of many computer vision applications, did not reach a usable frame rate when executed on the ARM core. Synthesizing them into hardware led to a significant performance increase for the overall design.
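To illustrate why edge detection is costly in software: the Sobel operator evaluates two 3x3 kernels at every pixel, which at 640x480 means roughly 300,000 neighborhood evaluations per frame. The following plain C++ sketch computes the Sobel gradient magnitude with the common |Gx| + |Gy| approximation. It is not the project's code (the project used OpenCV on the PS and the HLS Video Library in the PL); the row-major 8-bit layout, zeroed borders and saturation at 255 are choices made for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Sobel gradient magnitude on an 8-bit grayscale image stored row-major.
// Uses |Gx| + |Gy| instead of sqrt(Gx^2 + Gy^2); border pixels are left at 0.
std::vector<std::uint8_t> sobel(const std::vector<std::uint8_t>& img, int width, int height) {
    assert(width >= 3 && height >= 3);
    assert(img.size() == static_cast<std::size_t>(width) * height);
    std::vector<std::uint8_t> out(img.size(), 0);
    auto at = [&](int x, int y) { return static_cast<int>(img[y * width + x]); };
    for (int y = 1; y < height - 1; ++y) {
        for (int x = 1; x < width - 1; ++x) {
            // Horizontal gradient: right column minus left column, center row weighted by 2.
            const int gx = (at(x + 1, y - 1) + 2 * at(x + 1, y) + at(x + 1, y + 1))
                         - (at(x - 1, y - 1) + 2 * at(x - 1, y) + at(x - 1, y + 1));
            // Vertical gradient: bottom row minus top row, center column weighted by 2.
            const int gy = (at(x - 1, y + 1) + 2 * at(x, y + 1) + at(x + 1, y + 1))
                         - (at(x - 1, y - 1) + 2 * at(x, y - 1) + at(x + 1, y - 1));
            const int mag = std::abs(gx) + std::abs(gy);
            out[y * width + x] = static_cast<std::uint8_t>(mag > 255 ? 255 : mag);
        }
    }
    return out;
}
```

Every output pixel depends only on a fixed 3x3 window, so a hardware implementation can stream pixels through line buffers and produce one result per clock cycle, whereas the CPU has to loop over all pixels sequentially.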

The right balance between hardware and software is key to designing high-performance applications. The detection of door handles showed the potential of the ZYNQ as a flexible platform for object recognition. The hardware takes over most of the processing, while the software is responsible for decision-based tasks. Optimizing the software running on the ARM cores should increase performance, and a better choice of algorithms should lead to a more robust detector. These topics are left for further development.

Project term

March 2015 to June 2015

Contact

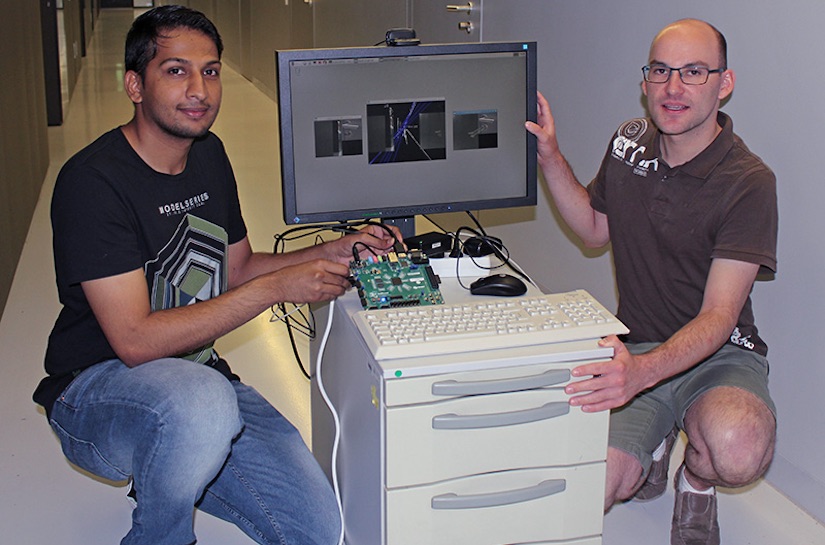

- Dipl.-Ing. Marcel Putsche

- Murali Padmanabha

- Christian Schott