Teaming agents

AI is imperfect and depends on cooperation with humans: their support, assistance, and advice. In the area of human-machine teaming, we are investigating a framework for designing AI agents that can solve even complex tasks in cooperation with humans. To deal with imperfection, an agent must be aware of the uncertainty in its environment and in its own decisions and, consequently, actively engage in communication with humans to reduce that uncertainty (Information Seeking).

Framework for teaming agents

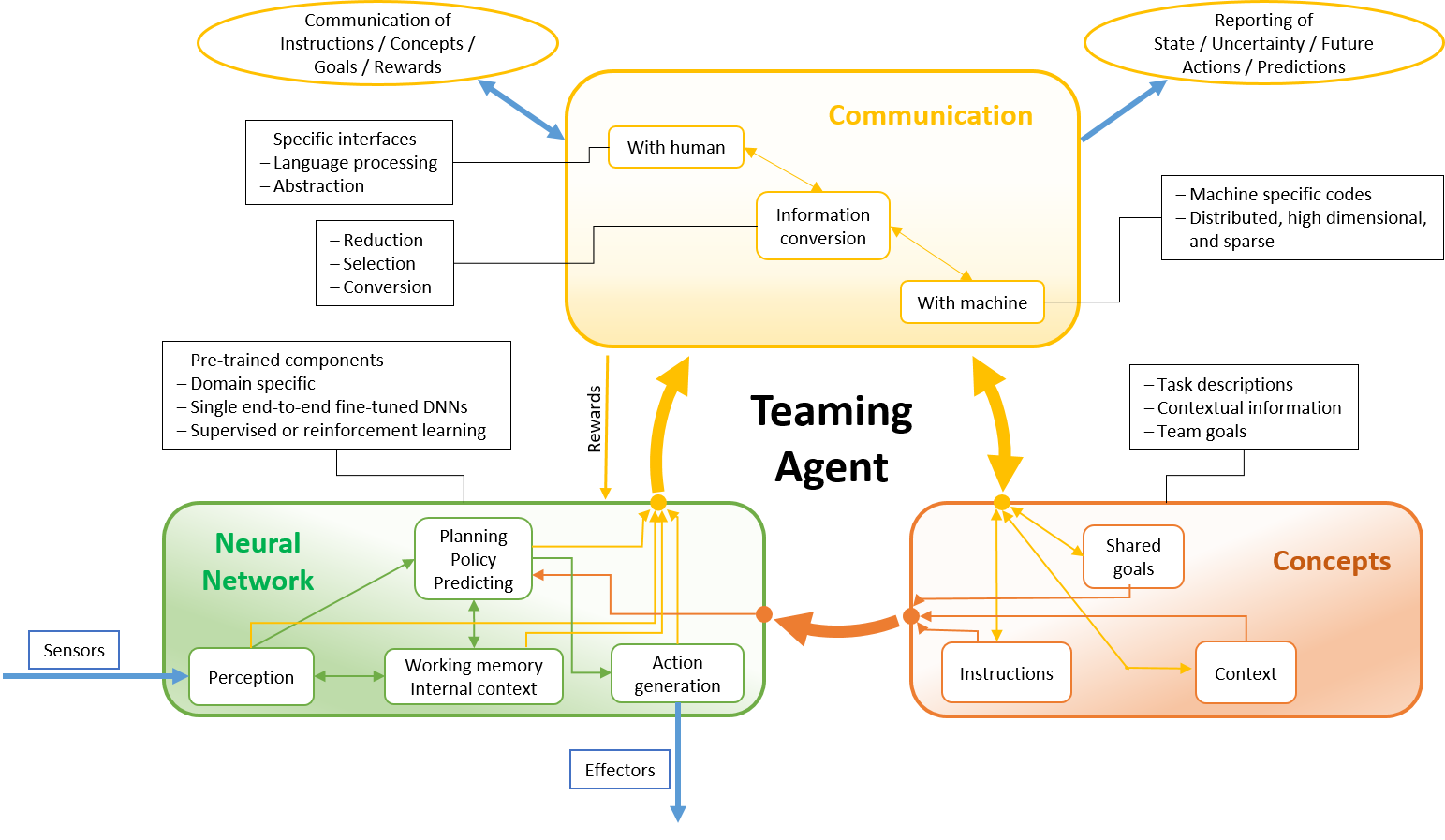

The rise of artificial agents that excel in complex tasks makes it feasible for humans and machines to form efficient teams. However, the design of such a software agent, and the requirements it must meet, are still unclear. We identified important factors for human-machine teaming and characterized teaming situations by the presence of shared (sub-)goals, communication, interdependence, and the ability to learn and adapt. On this basis, we introduced a framework for software teaming agents that utilizes state-of-the-art deep neural networks and addresses how communication and conceptual information can be incorporated into such a design (Teichmann et al. 2023).

Information Seeking

Teaming with a human will often lead to situations that are unpredictable for the agent, as it is difficult to equip the agent with a full theory of mind of its human partner. In situations where the agent is able to fully predict the dynamics of its environment, including the behavior and intentions of its partner, it can rely on its learned behavior and respond to its sensory inputs in a straightforward, reactive manner. When the world is uncertain, the agent should instead spend more time looking for information than acting on its present knowledge, which may lead to severe errors. This requires the agent to anticipate its performance given its present input by internally monitoring the likelihood of outcomes. As a result, the agent may start interacting with its partner in order to find out the partner's intentions or what the current task is about. Thus, our goal is to equip machines with information-seeking behavior to reduce errors and improve their learning.
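The switch between reactive behavior and information seeking can be illustrated with a minimal sketch. It is not the framework's implementation: it assumes the agent already has a probability distribution over possible outcomes, uses Shannon entropy as a stand-in for the internal monitoring of outcome likelihoods, and applies a fixed threshold. The names `entropy`, `choose_mode`, and `threshold_bits` are hypothetical and chosen only for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy (in bits) of a discrete outcome distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def choose_mode(outcome_probs, threshold_bits=0.8):
    """Decide whether to act on learned behavior or to seek information.

    outcome_probs: the agent's predicted probabilities over outcomes
                   (assumed to be given; how they are computed is not
                   specified here).
    threshold_bits: hypothetical uncertainty level above which the agent
                    asks its partner instead of acting.
    """
    if entropy(outcome_probs) > threshold_bits:
        return "ask"  # uncertain: query the partner about intentions or task
    return "act"      # predictable: rely on learned, reactive behavior


# One outcome dominates, so the agent acts on its present knowledge.
confident = [0.95, 0.03, 0.02]
# Outcomes are nearly equally likely, so the agent seeks information first.
uncertain = [0.40, 0.35, 0.25]

print(choose_mode(confident))
print(choose_mode(uncertain))
```

In a real agent the threshold would likely depend on the cost of an error relative to the cost of interrupting the partner, rather than being a constant.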

Selected Publications

Teichmann M., Vitay J., Ragni M., Gaedke M., Hamker F. H. (accepted). Human-Machine Teaming Agents: A Future Perspective.