Robot Vision

Organizational issues

| Term: | summer semester (2/1/1) |

| Requirements: | none |

| Exam: | oral |

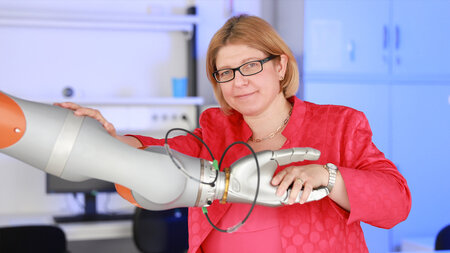

| Lecturer: | Prof. Dr.-Ing. Ulrike Thomas |

| Sign up: | OPAL |

| Timetable: | Link to the timetable of the TU |

Contents

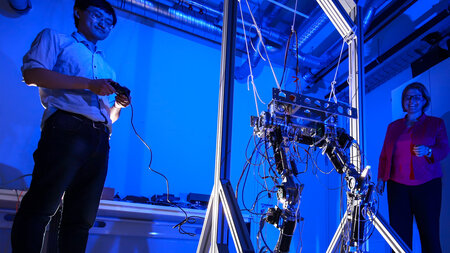

The lecture Robot Vision (formerly 'Visual Servoing') deals with image processing for robotics. Robots must interact with their environment, so they need to perceive it, and visual perception plays a key role in that. The lecture covers image processing, hand-eye calibration, and visual servoing. First, the camera model is explained. Afterwards, methods for image enhancement are presented. Feature recognition plays an important role in visual perception, and different techniques for feature detection are explained. Segmentation is used in many image processing applications, and several segmentation techniques are explained. Afterwards, we will deal with stereo vision and other methods for depth perception, such as shape from shading and stripe projection. An important part of the visual ability of robots is tracking objects in order to interact with them, and different tracking algorithms are presented. After we have worked out the basics of computer vision, we will deal with special visual servoing methods.

Outline:

- Introduction and Basics

- Image Enhancement

- Recognition of various features

- Depth Imaging

- Tracking

- Locating Objects in Space

- Visual Servoing

- Time for Questions

- Oral Examination

Literature

- Szeliski, R. (2010). Computer Vision: Algorithms and Applications. Springer Science & Business Media.

- Hartley, R., & Zisserman, A. (2004). Multiple View Geometry in Computer Vision (2nd ed.). Cambridge: Cambridge University Press.

- Forsyth, D., & Ponce, J. (2011). Computer Vision: A Modern Approach. Prentice Hall.